In 2025, artificial intelligence is set to redefine industries and unlock new possibilities we’re only beginning to imagine. Expect superintelligent systems transforming problem-solving on a global scale, small language models making AI more efficient and accessible, and physical AI, like autonomous robots, taking on tasks once thought impossible. From advances in personalized healthcare to AI-generated music and video, AI’s future looks extraordinary.

In this blog, we’re diving into three bold predictions that showcase how AI will shape our world in 2025—and the innovators driving these changes.

1. The Rise of Physical AI

Picture this: advanced robotic surgery systems performing delicate operations with greater precision than ever before, AI-powered drones helping firefighters combat wildfires by providing real-time situational updates, and autonomous vehicles seamlessly managing entire delivery ecosystems. By 2025, physical AI—intelligent systems capable of navigating and interacting with the real world—will begin scaling across industries, reshaping how we approach healthcare, logistics, manufacturing, and emergency management.

In healthcare, robotic surgery systems are set to expand their capabilities dramatically. Future systems will integrate AI and machine learning to analyze patient data, predict complications, and assist surgeons in real time. We will start to see enhanced imaging technologies, such as high-resolution 3D and augmented reality (AR), become standard, giving surgeons unprecedented precision and shortening recovery times for patients. Miniaturized robots are poised to enable less invasive procedures, reaching hard-to-access areas in neurosurgery and cardiovascular interventions. Remote surgery could also take off, connecting top surgeons with patients in underserved areas through secure, robot-enabled platforms.

In logistics and infrastructure, we will start to see autonomous drones and vehicles scale up significantly. Google’s FireSat satellite system is an example of how AI is set to enhance disaster response, detecting wildfires as small as a classroom and sending high-resolution updates every 20 minutes. Autonomous drones are expected to take on broader roles, assisting with critical infrastructure monitoring and rapid resource deployment during emergencies. In manufacturing, robotic systems powered by physical AI are set to evolve, predicting and resolving production issues before they disrupt operations.

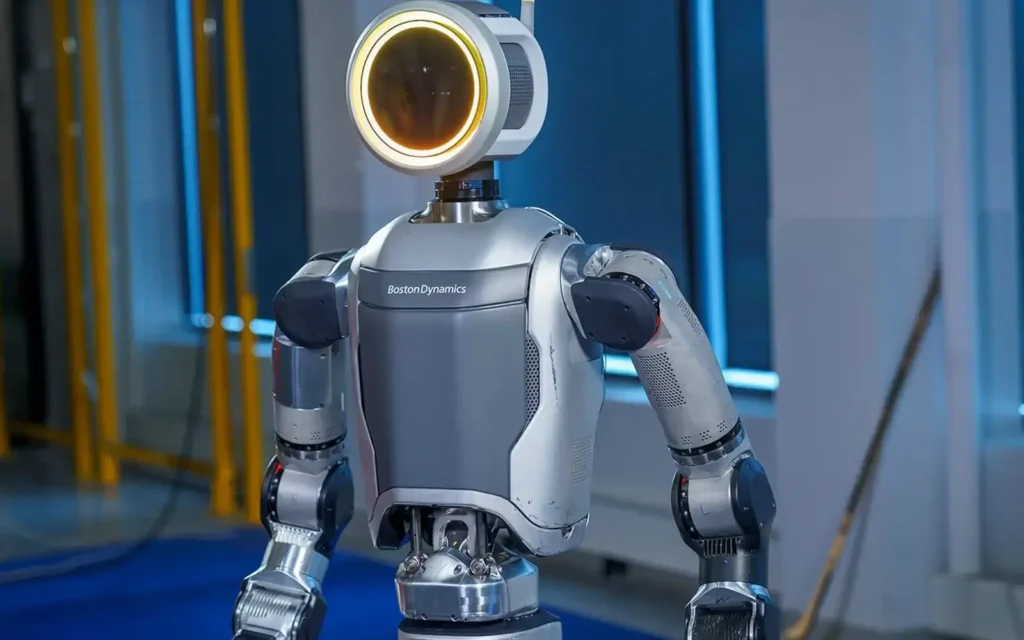

Ones to Watch: Waymo’s Robotaxi & Boston Dynamics’ Atlas Robot

Waymo is leading the autonomous vehicle race with its robotaxi service, currently completing nearly 200,000 paid trips per week in select cities. Its self-driving cars also have a strong safety record, with insurance-claims data showing up to 92% fewer bodily injury claims than human-driven vehicles. As it prepares to expand internationally, Waymo is poised to become a dominant player in autonomous transportation.

Boston Dynamics’ Atlas robot made waves in 2024 with a fully electric reboot capable of autonomously performing complex industrial tasks. From independently moving engine covers to adapting to real-time changes, Atlas showed remarkable dexterity and autonomy, impressing and unnerving audiences alike. Through its partnership with Toyota Research Institute (TRI), Atlas is set to advance even further in 2025, using TRI’s large behavior models to improve multitasking and decision-making, which could cement Boston Dynamics as a leader in humanoid robotics.

2. Small Language Models Take Center Stage

In 2025, small language models (SLMs) will become ubiquitous. Unlike their larger counterparts, large language models (LLMs), which rely on massive datasets and computational resources, SLMs are smaller, faster, and more efficient, making them accessible to a wider range of users and applications. The focus is shifting toward models that are compact yet powerful enough to meet specific needs with precision.

Small language models are designed to handle domain-specific tasks with fewer parameters and lower resource demands, making them ideal for businesses that don’t require the computational heft of larger models.

For example, an SLM trained on customer service data could power an efficient chatbot, while another optimized for healthcare might summarize patient records or analyze medical notes. This shift not only reduces costs but also improves accessibility, allowing smaller companies to use AI without the prohibitive overhead of LLMs. SLMs are quicker to train, easier to deploy, and can often run directly on devices.

Ones to Watch: Cohere Health & Hugging Face’s SmolLM

Cohere Health uses small language models to pull detailed and accurate information from medical records. These models help identify important data like lab results, dates, and related details, making it easier and faster to approve medical service requests while reducing errors.

Hugging Face’s new small language model, SmolLM, is designed to run directly on mobile devices, addressing key challenges like data privacy and latency. With three sizes—135 million, 360 million, and 1.7 billion parameters—SmolLM brings powerful AI processing to edge devices, enabling faster, more private applications without relying on constant cloud connectivity. This innovation opens doors for advanced mobile features, from real-time language translation to personalized AI assistants.
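To see why parameter counts like these matter for on-device use, here is a rough back-of-the-envelope estimate of how much memory the model weights alone would need at common numeric precisions. It is a sketch under simplifying assumptions: it multiplies parameter count by bytes per parameter and ignores activations, caches, and runtime overhead. The precision table and the `weight_memory_gb` helper are our own illustration, not figures published for SmolLM.

```python
# Back-of-the-envelope weight-memory estimate for the three SmolLM sizes.
# Assumption: memory ~= parameter count x bytes per parameter. Activations,
# KV cache, and runtime overhead are ignored, so real usage will be higher.

PARAM_COUNTS = {
    "135M": 135_000_000,
    "360M": 360_000_000,
    "1.7B": 1_700_000_000,
}

# Bytes needed to store one parameter at each (illustrative) precision.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: int, precision: str) -> float:
    """Approximate weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Print one row per model size, one column per precision.
    for size, n in PARAM_COUNTS.items():
        row = ", ".join(
            f"{p}: {weight_memory_gb(n, p):.2f} GB" for p in BYTES_PER_PARAM
        )
        print(f"SmolLM {size} -> {row}")
```

At 16-bit precision this puts the 135M model at roughly 0.27 GB of weights and the 1.7B model at about 3.4 GB. 4-bit quantization would bring the largest size down to about 0.85 GB, which helps explain why models of this scale are plausible candidates for phones and other edge devices.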

3. The End of Dark Data

Industries are sitting on an estimated 120 zettabytes of dark data: unstructured information stored in formats like documents, images, videos, and audio. While we’ve developed the technology to collect and store this data in vast “data lakes,” traditional systems have struggled to process or analyze it effectively. By 2025, a convergence of AI advancements will finally enable businesses to unlock this resource, transforming dark data into actionable insights that drive innovation and efficiency across industries.

What makes 2025 different? A new generation of domain-specific multimodal AI models is emerging. These models are fine-tuned for industries like healthcare, manufacturing, and retail, allowing them to interpret complex, nuanced data. For instance, a healthcare-focused model might analyze medical imaging alongside patient histories, while a manufacturing model could integrate sensor data with maintenance logs to predict failures. This tailored approach ensures that AI can make sense of data that was previously too complex or fragmented to analyze.

At the same time, advancements in real-time processing are changing the game. Improvements in edge computing and energy-efficient AI chips will allow businesses to analyze dark data as it’s generated—whether that’s a stream of video footage, audio logs, or sensor outputs—enabling immediate action. Companies will no longer need to wait for lengthy post-collection processing, accelerating insights and decision-making.

One to Watch: NVIDIA’s Jetson Series for Real-Time AI

NVIDIA’s Jetson series is setting the standard for real-time AI at the edge, enabling businesses to process streaming video, sensor data, and audio logs as they’re generated. With energy-efficient designs optimized for edge computing, Jetson platforms let industries like logistics and manufacturing avoid the latency of sending data to the cloud, making instant insights and on-the-spot decision-making a reality. These capabilities are helping enterprises unlock the value of dark data with unprecedented speed and efficiency.