Massed Compute Blog

6 common data challenges when creating a machine learning model (and how to avoid them)

The goal of any data science project is turning raw data into valuable insights for [...]

Jul

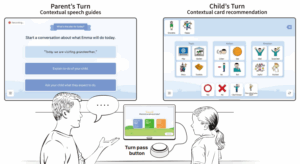

“How about you, Mom?” AI-integrated speech and language tools help nonverbal children learn to converse.

Advancements in technology and science, especially in AI-powered speech tools, in the last two decades [...]

Jul

What are AI tokens and why are they the new AI metric?

Just like ticket sales measure the success of a concert or page views measure the [...]

Jun

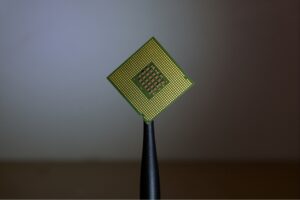

Why NVIDIA’s chips dominate the AI market

NVIDIA is the current leader in the global artificial intelligence (AI) chip market and is [...]

Jun

How to understand and read GPU benchmarks correctly

GPU (Graphics Processing Unit) benchmark comparisons are used to measure the performance of a GPU. [...]

Jun

Is my business ready for AI?

When people talk about one of the major appeals of artificial intelligence (AI) for businesses, [...]

Jun

Is GPU computing suitable for big data analytics?

Today, anyone with a basic computer can perform powerful data analysis and extract valuable [...]

May

How is AI Transforming Customer Service?

Thanks to the widespread accessibility of technology, companies have discovered new and revolutionary ways to [...]

May

January Roundup: What’s New in AI Developer Tools

As AI and development tools continue to evolve, January brought several exciting advancements designed to [...]

Mar

How RAG Unlocks Search for AI Models

We’ve all experienced AI hallucinations—those moments when a chatbot or AI assistant confidently provides an [...]

Mar

Is DeepSeek a threat or an opportunity for NVIDIA’s chips?

The launch of DeepSeek, an open-source artificial intelligence model developed in China, generated a wave [...]

Mar

Our Favorite Mindblowing AI Predictions for 2025

In 2025, artificial intelligence is set to redefine industries and unlock new possibilities we’re only [...]

Jan

3 ways to integrate AI into your workflow

Artificial Intelligence (AI) is no longer a futuristic concept limited to tech giants. It has [...]

Jan

How does a virtual desktop interface make AI development easier?

When developing AI, having access to efficient and powerful tools helps move projects forward in [...]

Jan

What are cloud AI developer services?

Until recently, getting involved in AI development required expensive hardware and deep technical expertise—this kept [...]

Dec

What is GPU cloud computing?

GPUs (Graphics Processing Units) outperform CPUs in tasks like AI, machine learning, 3D rendering, and [...]

Dec

Why is NVIDIA considered one of the best AI companies?

Since its founding in 1993, NVIDIA has gone on an impressive journey. It’s evolved from [...]

Nov

Why GPU Benchmarks Matter for Rentable GPUs

In today’s tech world, the high demand for powerful Graphics Processing Units (GPUs) makes renting [...]

Nov

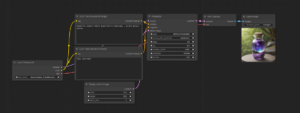

NEW – Fully Prepared ComfyUI Workflows

ComfyUI stands out as a premier application in the realm of image-based Generative AI, boasting [...]

Nov

NVIDIA GPU Display Driver Security Vulnerability – October 2024

NVIDIA recently posted a security bulletin that describes several critical vulnerabilities found in NVIDIA drivers. [...]

Nov

Impact of updated NVIDIA drivers on vLLM & HuggingFace TGI

If you are building a service that relies on LLM inference performance, you want to [...]

Oct

Want to build a custom Chat GPT? The top cloud computing platforms to create your own LLM

Want to Build a Custom Chat GPT? Here’s How to Pick the Best Cloud Computing [...]

Sep

What is Hacktoberfest and is it good for beginners?

Hacktoberfest is an annual event that runs throughout October, celebrating open-source projects and the community [...]

Sep

What is AI inference vs. AI training?

When it comes to Artificial Intelligence (AI) models, there are two key processes that allow [...]

Aug

What is generative AI and how can I use it?

While it may seem that AI is a recent phenomenon, the field of artificial intelligence [...]

Aug

Advantages of Cloud GPUs for AI Development

Artificial Intelligence (AI) development has become a cornerstone of innovation across numerous industries, from healthcare [...]

Aug

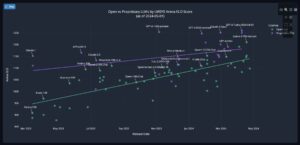

Llama 3.1 Benchmark Across Various GPU Types

Figure: Generated from our Art VM Image using Invoke AI. Previously we performed some benchmarks [...]

Jul

Maximizing AI efficiency: Insights into model merging techniques

What’s a great piece of advice when you venture on a creative path that requires [...]

Jul

NVIDIA Research predicts what’s next in AI, from better weather predictions to digital humans

NVIDIA’s CEO, Jensen Huang, revealed some of the most exciting technological innovations during his keynote [...]

Jul

How do I start learning about LLM? A beginner’s guide to large language models

In the era of Artificial Intelligence (AI), Large Language Models (LLMs) are redefining our interaction [...]

Jun

Llama 3 Benchmark Across Various GPU Types

Update: Looking for Llama 3.1 70B GPU Benchmarks? Check out our blog post on Llama [...]

Jun

GPU vs CPU

Often considered the “brain” of a computer, processors interpret and execute programs and tasks. In [...]

May

One-Click Easy Install of ComfyUI

ComfyUI provides users with a simple yet effective graph/nodes interface that streamlines the creation and [...]

May

Open Source LLMs gain ground on proprietary models

Recently, there have been a few posts about how open-source models like Llama 3 are [...]

May

Best Llama 3 Inference Endpoint – Part 2

Considerations; Testing Scenario; Startup Commands; Token/Sec Results. vLLM, 4xA6000: 14.7, 14.7, 15.2, 15.0, 15.0 tokens/sec. Average token/sec: 14.92 [...]

Apr

Leverage Hugging Face’s TGI to Create Large Language Models (LLMs) Inference APIs – Part 2

Introduction – Multiple LLM APIs If you haven’t already, go back and read Part 1 [...]

Mar

Leverage Hugging Face’s TGI to Create Large Language Models (LLMs) Inference APIs – Part 1

Introduction Are you interested in setting up an inference endpoint for one of your favorite [...]

Feb

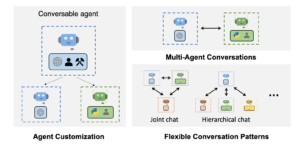

AutoGen with Ollama/LiteLLM – Setup on Linux VM

In the ever-evolving landscape of AI technology, Microsoft continues to push the boundaries with groundbreaking [...]

Nov