When it comes to artificial intelligence (AI) models, there are two key processes that allow them to generate outputs and make predictions: AI training and AI inference.

Below we cover some common questions about the two.

What is AI training?

In the AI training phase of a machine learning model’s life cycle, you typically gather data and feed it to your model using code. The model then processes this data, adjusts its parameters, and gradually learns to perform specific tasks.
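To make “adjusts its parameters” concrete, here is a minimal sketch of a training loop in plain Python. It assumes a toy model with a single neuron (one weight `w` and one bias `b`), a hypothetical dataset drawn from the line y = 2x + 1, and plain gradient descent on mean squared error. Real frameworks automate the same idea at a much larger scale.

```python
# Hypothetical training data: points on the line y = 2x + 1
training_data = [(x, 2 * x + 1) for x in range(-5, 6)]

def train(data, learning_rate=0.01, epochs=1000):
    """Fit y = w * x + b with plain gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # start from arbitrary parameter values
    n = len(data)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Move each parameter a small step against its gradient to reduce the error
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

w, b = train(training_data)
print(f"learned w={w:.3f}, b={b:.3f}")  # approaches w=2, b=1
```

The model starts out knowing nothing (w = b = 0) and ends up close to the true line purely by repeatedly comparing its predictions with the data.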

To use an analogy, we can think of AI training as a student studying for an exam. In this study phase, the student reads books, takes notes, watches videos, searches online for information on the subject, and does practice tests. In other words, the student absorbs and processes information to learn how to answer exam questions.

How can I upload data into my model for AI training?

Most developers use open-source machine learning frameworks to load their data and train their models. These libraries of pre-written code provide the infrastructure for building and running a model, so that it can learn to predict outcomes, identify patterns, and make sense of the data.

Two of the most common machine learning frameworks are TensorFlow and PyTorch, though the one you choose will depend on your workload.

What is AI inference?

In the AI inference phase, the trained model is deployed and applied to new input data: it performs the task it was trained on (for example, classifying an image or generating text) and makes predictions.
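Inference, by contrast, is just a forward pass with parameters that are no longer changing. The sketch below assumes a toy one-neuron model whose weight and bias (hypothetical values `TRAINED_W` and `TRAINED_B`) came out of an earlier training run; nothing is learned here, the model simply applies what it already knows to inputs it has never seen.

```python
# Parameters from a previous training run (hypothetical values for illustration)
TRAINED_W = 2.0
TRAINED_B = 1.0

def predict(x, w=TRAINED_W, b=TRAINED_B):
    """Inference: one forward pass using fixed, already-learned parameters."""
    return w * x + b

# New inputs the model never saw during training
for x in [10, -3.5, 42]:
    print(x, "->", predict(x))
```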

Think of the AI inference phase as “exam day” for the trained model: it puts what it learned into practice and answers questions in the form of predictions.

For a machine learning model to answer questions or perform tasks correctly, it can’t simply “copy and paste” memorized information from the training phase. It has to apply what it learned and make deductions to formulate coherent, precise answers, abilities that were once exclusive to human intelligence.

Is AI inference the same as training?

AI inference and training are not the same thing, though they do go hand in hand.

During AI training, you run your machine learning model on large amounts of data, then test and validate it.

For a machine learning model to make inferences about new inputs, it must use the knowledge it acquired during the AI training phase.
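One common way to check that training produced knowledge the model can apply to new inputs is to hold out part of the data and validate on it. The minimal sketch below assumes a hypothetical noisy dataset around y = 3x - 2 and, for brevity, fits it with closed-form least squares instead of an iterative training loop; the point is the split between data used for training and data used only for validation.

```python
import random

random.seed(0)
# Hypothetical dataset: y = 3x - 2 plus a little noise
data = [(x, 3 * x - 2 + random.uniform(-0.5, 0.5)) for x in range(100)]
random.shuffle(data)
train_set, validation_set = data[:80], data[80:]  # hold out 20% that is never used for fitting

def fit(points):
    """Fit y = w*x + b with ordinary least squares (closed form)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in points)
    var = sum((x - mean_x) ** 2 for x, _ in points)
    w = cov / var
    return w, mean_y - w * mean_x

def mean_abs_error(points, w, b):
    """Average absolute gap between the model's predictions and the true values."""
    return sum(abs(w * x + b - y) for x, y in points) / len(points)

w, b = fit(train_set)
print("training error:  ", mean_abs_error(train_set, w, b))
print("validation error:", mean_abs_error(validation_set, w, b))
```

If the validation error is close to the training error, the model has learned the underlying pattern rather than memorizing the training examples.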

AI training is typically a longer phase and requires more computational resources than AI inference.

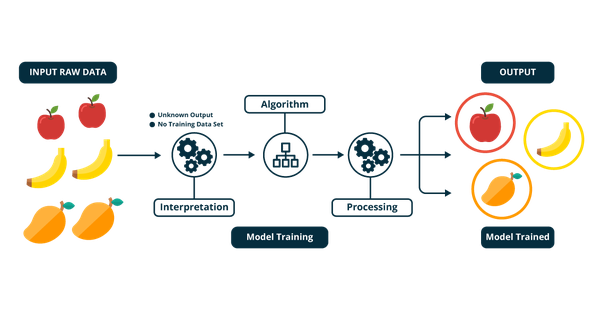

Simplified version of AI model training

What is the role of GPUs in AI training and AI inference?

GPUs (graphics processing units) are processors designed to perform many mathematical calculations simultaneously.

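That’s why, for highly parallel workloads such as AI, they can process data faster and more energy-efficiently than CPUs (central processing units), which have fewer cores built for general-purpose, largely sequential work.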

GPUs are well suited to AI training and inference for two reasons:

- Training AI models, especially deep neural networks, involves an enormous number of mathematical operations (mostly matrix multiplications) that can be performed in parallel.

- AI models are generally trained on large volumes of data, which GPUs can process in large batches at once.
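To see why these workloads parallelize so well, consider one fully connected layer of a neural network, sketched below in plain Python. Each output value depends only on one input row and one weight column, so all of them could in principle be computed at the same time; this loop computes them one after another, as a single CPU core would. The layer sizes in the usage line are hypothetical.

```python
def dense_layer(inputs, weights, biases):
    """One fully connected layer for a batch: out[i][j] = sum_k inputs[i][k] * weights[k][j] + biases[j].

    Each out[i][j] is independent of every other output, which is the kind of
    work a GPU can spread across thousands of cores. Here it runs sequentially.
    """
    batch, n_in, n_out = len(inputs), len(weights), len(weights[0])
    return [
        [sum(inputs[i][k] * weights[k][j] for k in range(n_in)) + biases[j] for j in range(n_out)]
        for i in range(batch)
    ]

def multiply_adds(batch, n_in, n_out):
    """Multiply-add operations needed for one forward pass through such a layer."""
    return batch * n_in * n_out

# A modest, hypothetical layer: a batch of 64 inputs, 4096 features in, 4096 features out
print(f"{multiply_adds(64, 4096, 4096):,} multiply-adds for a single layer")
```

That single layer already needs over a billion multiply-adds per batch, and a deep network stacks many such layers.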

Why does AI training require more computational resources than AI inference?

Just as a human student may spend hours studying to prepare for an exam, an AI model requires significant computation to learn from new data. Once it has learned, however, applying that knowledge is comparatively cheap.

AI training discovers patterns through “trial and error” while analyzing large volumes of data: the model makes a prediction, measures how far it is from the correct answer, and adjusts the parameters of each neuron (its weights and biases) to reduce that error. Over time, it learns to produce the correct outputs.

Because this process repeats across the entire dataset, often many times over, AI training can take a very long time and consume a lot of energy and GPU capacity.
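A back-of-the-envelope comparison shows the gap. The sketch below assumes a common rule of thumb (not a measurement) that a backward pass costs roughly twice as much as a forward pass, and uses hypothetical values for dataset size and number of epochs (full passes over the data).

```python
def training_cost(forward_cost, dataset_size, epochs, backward_factor=2.0):
    """Rough total cost of training: every example gets a forward AND a backward pass, every epoch.

    backward_factor=2.0 is a rule-of-thumb assumption (backward pass ~ 2x forward pass).
    """
    return dataset_size * epochs * forward_cost * (1 + backward_factor)

def inference_cost(forward_cost, num_inputs=1):
    """Inference: one forward pass per new input, with no backward pass and no parameter updates."""
    return num_inputs * forward_cost

# Hypothetical numbers: 1 unit of work per forward pass, 1 million examples, 10 epochs
train_units = training_cost(forward_cost=1, dataset_size=1_000_000, epochs=10)
infer_units = inference_cost(forward_cost=1)
print(f"training ~ {train_units:,.0f} units, one inference ~ {infer_units:,.0f} unit")
```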

Is inference faster than training?

Yes. AI inference uses a model that has already been trained and deployed. It does not have to repeat the training process to produce an output; it only has to run the new input through the model’s learned parameters.
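In practice, the learned parameters are saved once at the end of training and loaded whenever the model is deployed. Frameworks such as PyTorch and TensorFlow provide their own save and load utilities; the minimal sketch below stands in for them with a JSON file, a hypothetical file name, and hypothetical parameter values for a one-neuron model.

```python
import json
import os
import tempfile

# At the end of training (done once): persist the learned parameters.
learned = {"w": 2.0, "b": 1.0}  # hypothetical values standing in for a real training run
path = os.path.join(tempfile.gettempdir(), "toy_model.json")  # hypothetical file name
with open(path, "w") as f:
    json.dump(learned, f)

# At inference time (done as often as needed): load and reuse, with no retraining.
with open(path) as f:
    params = json.load(f)

def predict(x):
    """Forward pass using the loaded parameters."""
    return params["w"] * x + params["b"]

print(predict(5))  # 11.0
```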

How many GPUs are needed for AI training and AI inference?

How many GPUs you need depends on the project. You might start training your model on a standard GPU, like the one in your desktop or laptop, and find that training is too slow. In that case, you can upgrade to a more powerful GPU or add more GPUs to your system.

Because GPUs scale well, you can adjust the computing performance and capacity available to your AI workload as your needs change, either by moving to a more powerful GPU or by adding more GPUs.
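One rough way to size a project is to check how much GPU memory the model’s state needs. The sketch below relies on common rules of thumb rather than measurements: about 2 bytes per parameter to hold 16-bit weights for inference, and about 16 bytes per parameter for mixed-precision training with the Adam optimizer. It ignores activations, batch size, and framework overhead, so real requirements are higher. The 7-billion-parameter model is hypothetical; the 48 GB figure matches an NVIDIA RTX A6000.

```python
import math

# Approximate bytes per parameter (rule-of-thumb assumptions, excluding activations and overhead)
BYTES_PER_PARAM = {
    "inference_fp16": 2,        # 16-bit weights only
    "training_mixed_adam": 16,  # fp16 weights + fp16 grads + fp32 master weights + two fp32 Adam moments
}

def gpus_needed(num_params, mode, gpu_memory_gb):
    """Lower-bound estimate of GPUs of a given size needed just to hold the model state."""
    total_bytes = num_params * BYTES_PER_PARAM[mode]
    return math.ceil(total_bytes / (gpu_memory_gb * 1e9))

# Hypothetical 7-billion-parameter model on 48 GB GPUs
for mode in BYTES_PER_PARAM:
    print(mode, gpus_needed(7e9, mode, 48))
```

Even this crude estimate shows why training often needs several GPUs while inference for the same model may fit on one.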

On-demand GPU providers like Massed Compute let you rent GPUs as needed, providing a scalable solution without investing in your own hardware. If you want to try building your own AI language model, check out our marketplace to rent a GPU Virtual Machine. Use the coupon code MassedComputeResearch for 15% off any A6000 or A5000 rental.