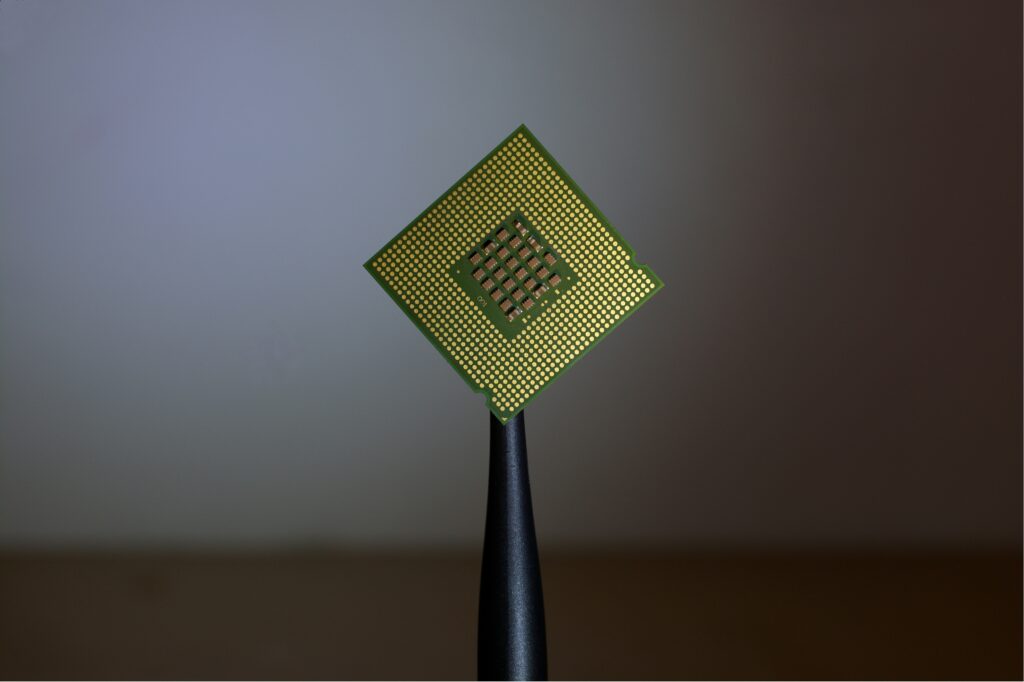

Computational power can often be the limiting factor between an idea and its realization. Traditional research setups that rely on on-premises workstations or small-scale servers can slow progress due to hardware limitations. Cloud GPU solutions, however, are transforming how researchers work by providing scalable, high-performance computing on demand. Here are 7 ways cloud GPUs save […]

Author Archives: Massed Compute

Today, big data and modern databases are the backbone of information management. But did you know that the roots of these technologies, long predating cloud computing and AI analytics, can be traced back to libraries, census offices and one interesting machine: the UNIVAC I? Let’s talk about the early evolution of data centers and […]

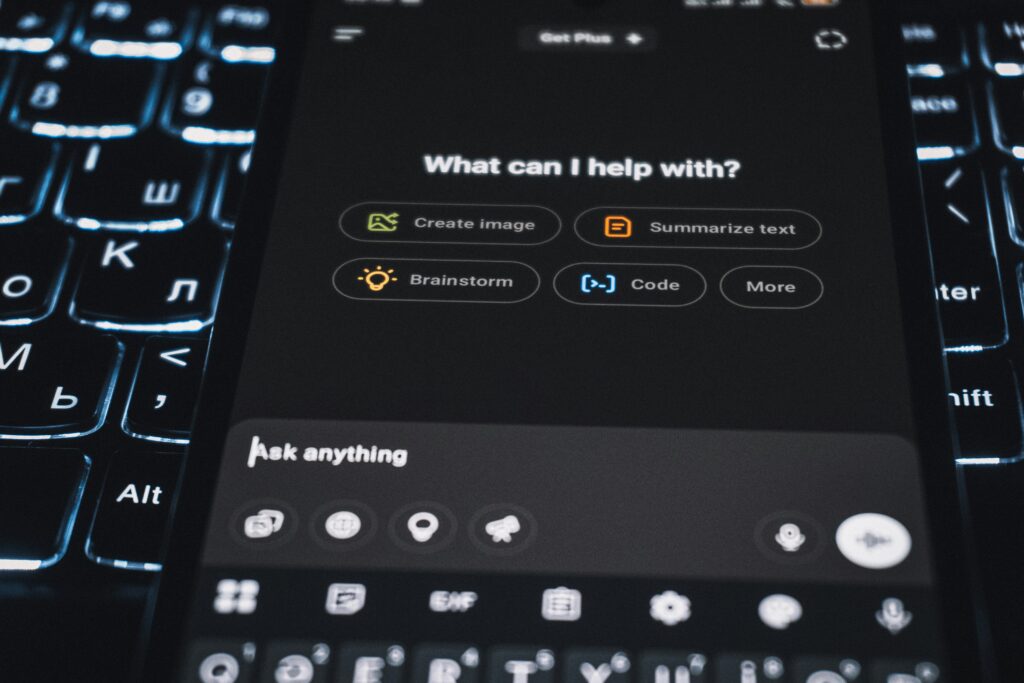

The launch of GPT-5 from OpenAI last month represents a major leap in how artificial intelligence (AI) interacts with users, making it feel more like a personal assistant than ever before. Unlike earlier versions, where users had to manually select a model such as GPT-3.5 or GPT-4 depending on their needs, GPT-5 automatically determines the best approach […]

If you’ve read even a little about artificial intelligence (AI), you’ve probably seen the term parameters in headlines about massive models with billions of them. You may also have come across phrases like “a neural network can contain up to millions of parameters,” or “calculating parameters requires significant computational power from a GPU.” Parameters are the […]

Massed Compute has reached a significant milestone in our mission to accelerate AI innovation. We have secured up to $300 million in equity and revenue share financing from Digital Alpha and a strategic collaboration with Cisco. This investment and partnership will significantly expand our AI cloud footprint, enabling us to meet growing market demand for […]

Expensive on-location shoots, elaborate studio sessions, and green screen setups have long been the backbone—and bottleneck—of photoshoots, demanding high budgets, prolonged post-production, and creative constraints. Not anymore. AI background image generators cut through this complexity by instantly replacing these cumbersome processes with tailor-made digital scenes. In seconds, they transform raw images, eliminating the need for […]

Artificial intelligence is reshaping how businesses solve problems and improve efficiency. From optimizing warehouse operations to streamlining customer interactions, companies across industries are leveraging AI to address challenges and drive innovation. We’ll explore four real-world examples of organizations successfully implementing AI to transform operations and achieve measurable results. Afterward, we’ll discuss how to identify opportunities […]

The goal of any data science project is to turn raw data into valuable insights for your company. This means detecting patterns that yield accurate predictions and, in turn, support better decisions. Let’s look at 6 of the most common problems data scientists face when gathering data to create machine learning models. Data problem #1: Unrepresentative samples […]

Just like ticket sales measure the success of a concert or page views measure the popularity of a website, tokens have become the go-to metric for artificial intelligence (AI) usage. But what exactly is a token and why are AI companies and investors counting them? While the meaning varies depending on the context, “tokenization” involves […]

NVIDIA is the current leader in the global artificial intelligence (AI) chip market and is playing a pivotal role in the accelerated computing revolution. Jensen Huang, the CEO of NVIDIA, has been traveling across Europe over the past week, striking AI partnerships and reinforcing the company’s commitment to expanding its influence across the continent. With […]