In Part 1, we looked at how tools like Ollama, LM Studio, and Text Generation WebUI perform as inference endpoints for Llama 3 70B. If you haven’t had a chance to read it, go back and check it out. That test produced great results, but we had to use a 4-bit quantized version of the model. In this test, we use two powerful inference engines, vLLM and Hugging Face TGI, to serve the full, unquantized Llama 3 70B.

Considerations

This test required two extra steps: creating a free Hugging Face account and accepting the Meta Llama 3 license agreement.

Typically, you can serve any model from Hugging Face. For the Llama 3 models, however, you must first accept the Meta license agreement. Accepting the license is straightforward: navigate to the model card on Hugging Face, make sure you are logged in, accept the terms, and request access to the model. Once you are approved, you can download the model. The Docker commands in this post pass a Hugging Face access token so that each engine can download the gated model.

Testing Scenario

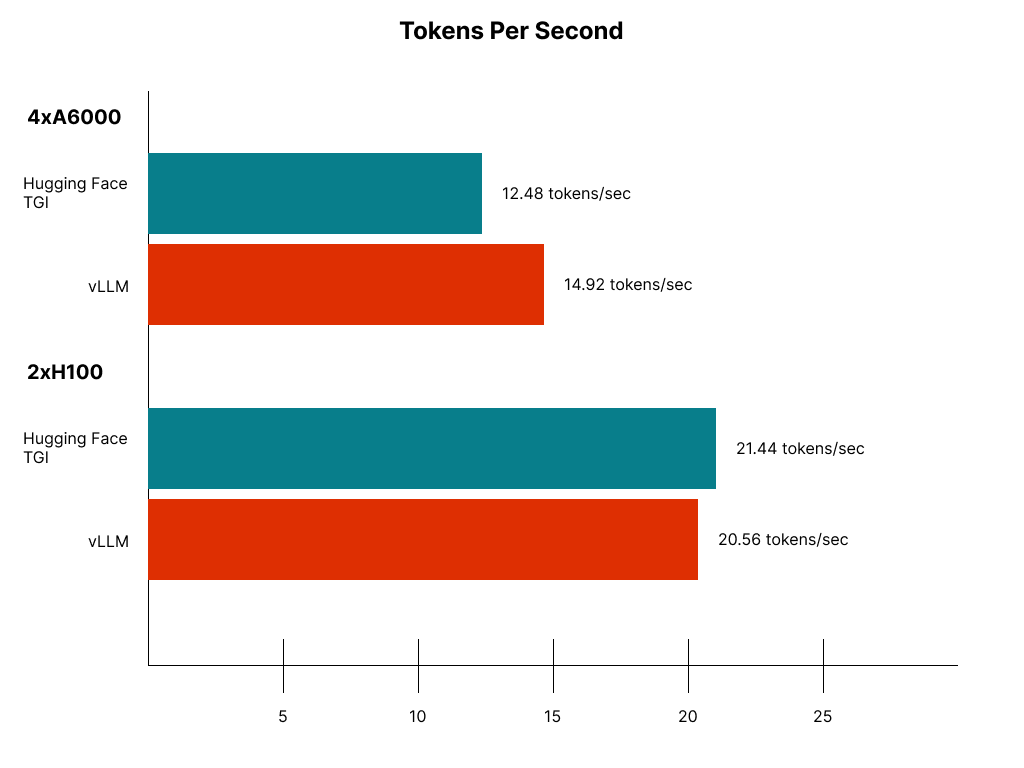

We ran tests on two GPU configurations: 4xA6000 and 2xH100. We also tried to load the model on 2xA6000 and 1xH100, but the unquantized model is too large for those configurations and requires more vRAM.
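A rough, weight-only estimate shows why the smaller configurations fall short. This is a sketch under stated assumptions that are not from the test itself: about 70 billion parameters stored as 16-bit weights (2 bytes each), 48 GB of memory per A6000, and 80 GB per H100. It ignores the KV cache and activations, so real requirements are higher. The helper names `weights_gb` and `fits` are hypothetical.

```shell
#!/usr/bin/env bash
# Weight-only memory estimate. Assumptions: 16-bit weights,
# 48 GB per A6000, 80 GB per H100. KV cache and activations are ignored.

weights_gb() {
  # $1 = parameters in billions, $2 = bytes per parameter
  # 1 billion parameters at 1 byte each is roughly 1 GB.
  awk -v p="$1" -v b="$2" 'BEGIN { printf "%d\n", p * b }'
}

fits() {
  # $1 = number of GPUs, $2 = GB per GPU, $3 = GB required
  if [ $(( $1 * $2 )) -ge "$3" ]; then echo "fits"; else echo "too small"; fi
}

need=$(weights_gb 70 2)
echo "weights: ${need} GB"
echo "2xA6000: $(fits 2 48 "$need")"
echo "1xH100:  $(fits 1 80 "$need")"
echo "4xA6000: $(fits 4 48 "$need")"
echo "2xH100:  $(fits 2 80 "$need")"
```

Under these assumptions the weights alone need about 140 GB, which exceeds the 96 GB of a 2xA6000 and the 80 GB of a single H100, while 4xA6000 (192 GB) and 2xH100 (160 GB) leave some headroom.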

Each test started with a fresh Virtual Machine using the base Ubuntu OS. We used each engine’s Docker commands to start and serve the model. Once the Docker container was serving the model, we used a cURL command to test it.

For our test, we made five requests to each engine with the same prompt: “Why is the sky blue?” We did not care about the accuracy of the generation; we were only looking at the tokens/sec of each generation. We then averaged the five results to get a final tokens-per-second value for each engine.
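The exact cURL command and timing method are not shown in this post, so the following is only a sketch of how such a measurement could be scripted. It assumes vLLM's OpenAI-compatible `/v1/chat/completions` route on port 8000, a `max_tokens` value of 256 chosen for illustration, GNU `date`, and `jq` for reading `usage.completion_tokens` from the response. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: the endpoint path, max_tokens value, and use of jq are assumptions.

MODEL="meta-llama/Meta-Llama-3-70B-Instruct"
URL="http://localhost:8000/v1/chat/completions"   # vLLM OpenAI-compatible server

# Build the JSON request body for a single-turn chat prompt.
build_payload() {
  # $1 = prompt text (no quotes or backslashes), $2 = max tokens to generate
  printf '{"model":"%s","messages":[{"role":"user","content":"%s"}],"max_tokens":%d}' \
    "$MODEL" "$1" "$2"
}

# Generated tokens divided by elapsed seconds, to two decimals.
tokens_per_sec() {
  awk -v t="$1" -v s="$2" 'BEGIN { printf "%.2f\n", t / s }'
}

# Mean of all arguments, to two decimals.
average() {
  printf '%s\n' "$@" | awk '{ sum += $1 } END { printf "%.2f\n", sum / NR }'
}

# Send one request and print its tokens/sec (needs curl, jq, and GNU date).
run_once() {
  local start end tokens elapsed
  start=$(date +%s.%N)
  tokens=$(curl -s "$URL" -H "Content-Type: application/json" \
    -d "$(build_payload "Why is the sky blue?" 256)" | jq '.usage.completion_tokens')
  end=$(date +%s.%N)
  elapsed=$(awk -v a="$start" -v b="$end" 'BEGIN { printf "%.3f\n", b - a }')
  tokens_per_sec "$tokens" "$elapsed"
}
```

With a server running, calling `run_once` five times and passing the five values to `average` gives the per-engine figure. Wall-clock timing like this includes prompt processing, so it slightly understates pure generation speed. TGI exposes a different route (`/generate`, with an `inputs` field), so the payload would need adapting for that engine.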

In this version of the experiment, we also included a cost-per-token analysis. Given that we have examined two distinct GPU types and setups, we were also interested in determining the more cost-efficient option.

Startup Commands

Here are the Docker commands for each engine.

vLLM

4xA6000

```
token={HUGGING_FACE_ACCESS_TOKEN}

docker run --runtime nvidia --gpus all -v ~/.cache/huggingface:/root/.cache/huggingface --env "HUGGING_FACE_HUB_TOKEN=$token" -p 8000:8000 --ipc=host vllm/vllm-openai:latest --model meta-llama/Meta-Llama-3-70B-Instruct -tp 4
```

2xH100

```
token={HUGGING_FACE_ACCESS_TOKEN}

docker run --runtime nvidia --gpus all -v ~/.cache/huggingface:/root/.cache/huggingface --env "HUGGING_FACE_HUB_TOKEN=$token" -p 8000:8000 --ipc=host vllm/vllm-openai:latest --model meta-llama/Meta-Llama-3-70B-Instruct -tp 2
```

Hugging Face TGI

4xA6000

```
mkdir data
volume=$PWD/data
token={HUGGING_FACE_ACCESS_TOKEN}
model=meta-llama/Meta-Llama-3-70B-Instruct

docker run --gpus all --shm-size 4g -e HUGGING_FACE_HUB_TOKEN=$token -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:1.4 --model-id $model --sharded true --num-shard 4
```

2xH100

```
mkdir data
volume=$PWD/data
token={HUGGING_FACE_ACCESS_TOKEN}
model=meta-llama/Meta-Llama-3-70B-Instruct

docker run --gpus all --shm-size 2g -e HUGGING_FACE_HUB_TOKEN=$token -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:1.4 --model-id $model --sharded true --num-shard 2
```

Token/Sec Results

vLLM

4xA6000

14.7 tokens/sec

14.7 tokens/sec

15.2 tokens/sec

15.0 tokens/sec

15.0 tokens/sec

Average token/sec 14.92

2xH100

20.3 tokens/sec

20.5 tokens/sec

20.3 tokens/sec

21.0 tokens/sec

20.7 tokens/sec

Average token/sec 20.56

Hugging Face TGI

4xA6000

12.38 tokens/sec

12.53 tokens/sec

12.60 tokens/sec

12.55 tokens/sec

12.33 tokens/sec

Average token/sec 12.48

2xH100

21.29 tokens/sec

21.40 tokens/sec

21.50 tokens/sec

21.60 tokens/sec

21.41 tokens/sec

Average token/sec 21.44

Looking purely at tokens/sec, Hugging Face TGI on the 2xH100 configuration produces the most tokens per second. However, when we consider the cost of each GPU setup, the picture changes.

Token per second chart (Higher is better)

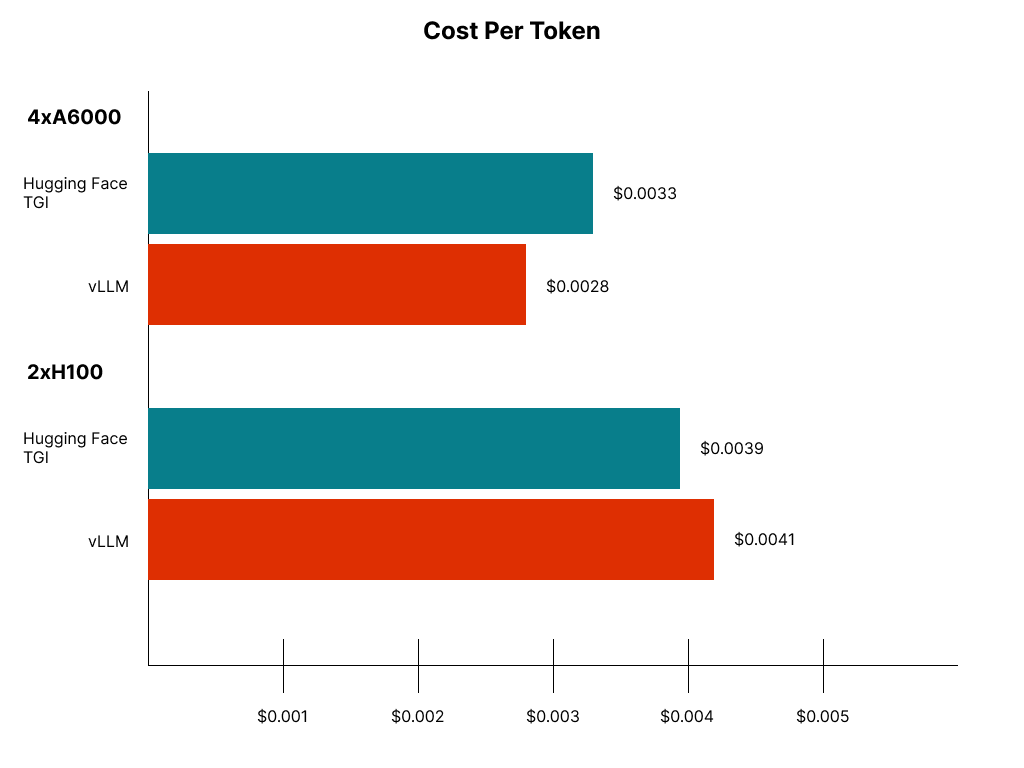

Cost Per Token Results

A 4xA6000 on our marketplace costs $2.50 per hour, and a 2xH100 costs $4.98 per hour. Taking the tokens generated into consideration, here are the results.

vLLM

4xA6000 – $0.0028

2xH100 – $0.0041

Hugging Face TGI

4xA6000 – $0.0033

2xH100 – $0.0039

Looking at these results, vLLM on a 4xA6000 is the most cost-effective configuration in this test.
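The post does not state the formula behind these figures, so here is one way to derive a cost from the hourly price and the measured throughput. It is a sketch, not the calculation used for the numbers above: cost per token is taken as the hourly price divided by the tokens generated in an hour (tokens/sec times 3600), and it is reported per million tokens for readability. The function name `cost_per_million` is hypothetical.

```shell
#!/usr/bin/env bash
# Cost per million generated tokens, assuming one request at a time
# running at the measured average tokens/sec for a full hour.

cost_per_million() {
  # $1 = price in USD per hour, $2 = average tokens per second
  awk -v price="$1" -v tps="$2" \
    'BEGIN { printf "%.2f\n", price / (tps * 3600) * 1000000 }'
}

echo "vLLM 4xA6000: \$$(cost_per_million 2.50 14.92) per 1M tokens"
echo "vLLM 2xH100:  \$$(cost_per_million 4.98 20.56) per 1M tokens"
echo "TGI  4xA6000: \$$(cost_per_million 2.50 12.48) per 1M tokens"
echo "TGI  2xH100:  \$$(cost_per_million 4.98 21.44) per 1M tokens"
```

Computed this way, the per-token cost is about 60 times smaller than the figures listed above, which are closer to the per-minute price divided by tokens/sec. That reading is an inference, since the formula is not given. The ranking is the same either way: vLLM on 4xA6000 is cheapest, followed by TGI on 4xA6000, TGI on 2xH100, and vLLM on 2xH100.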

Cost Per Token (Lower is better)

Conclusion

After running these tests, we saw how a completely unquantized Llama 3 70B model performs as an inference endpoint. The most cost-efficient configuration turned out to be vLLM on a 4xA6000 setup. This was unexpected, as we assumed a 2xH100 would reign supreme.

If you want to replicate these results, check out our marketplace to rent a GPU Virtual Machine. Sign up and use the coupon code `MassedComputeResearch` for 15% off any A6000 rental.