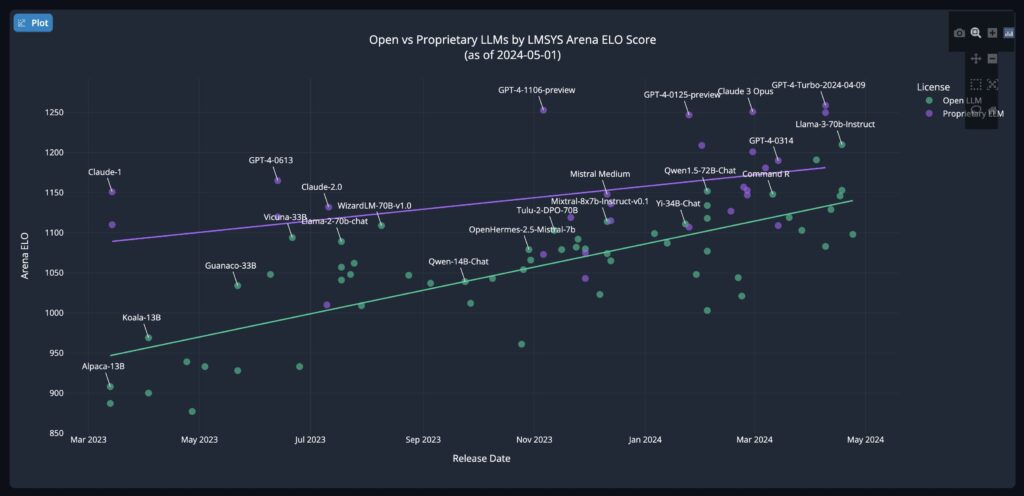

Recently, several posts have discussed how open-source models like Llama 3 are catching up to the performance of some proprietary models. Andrew Reed from Hugging Face created a progress tracker that visually compares various models. It shows a clear trend: open-source models are gaining ground.

Understanding the Data

First, what do this chart and its rankings actually mean? The LMSYS Chatbot Arena, introduced in a blog post in May 2023, serves as a benchmark platform for assessing the performance of large language models (LLMs). To establish a fair ranking system, the developers incorporated the Elo rating system, which was initially designed to gauge the skill levels of chess players.

In the LMSYS Chatbot Arena, users can input prompts to two different models without knowing their identities and select the one they believe performed better. Based on user selections, the platform calculates the relative skill levels of the models using the Elo rating system.

The Elo method determines the relative skill levels of players by comparing their win-loss records against other players. When a lower-ranked player defeats a higher-ranked one, the lower-ranked player gains more points than if they had defeated a similarly ranked opponent. Likewise, the higher-ranked player loses more points than they would after losing to a similarly ranked opponent. Over time, this system provides an increasingly accurate representation of a player’s or model’s skill level based on their performance history. By applying the Elo rating system to the LMSYS Chatbot Arena, the developers aim to ensure a fair and unbiased evaluation of the participating LLMs.
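To make the point exchange concrete, here is a minimal sketch of the textbook Elo update. It is an illustration, not the Arena's actual implementation: the function names and the K-factor of 32 are assumptions chosen for this example.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_elo(rating_a: float, rating_b: float, score_a: float, k: float = 32.0):
    """Return the new (rating_a, rating_b) after one head-to-head comparison.

    score_a is 1.0 if A was preferred, 0.0 if B was preferred, 0.5 for a tie.
    k (assumed to be 32 here) controls how far a single result moves a rating.
    """
    exp_a = expected_score(rating_a, rating_b)
    delta = k * (score_a - exp_a)
    # Whatever A gains, B loses, so the total number of points is conserved.
    return rating_a + delta, rating_b - delta


# Evenly matched models: the winner gains k/2 points.
even_a, even_b = update_elo(1000, 1000, score_a=1.0)

# Upset: a 1000-rated model beats a 1200-rated one and gains more than k/2.
upset_a, upset_b = update_elo(1000, 1200, score_a=1.0)
```

In the upset case the lower-rated model's expected score is only about 0.24, so its win moves both ratings by roughly 24 points rather than 16, which is the behavior described above.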

GPU Demand

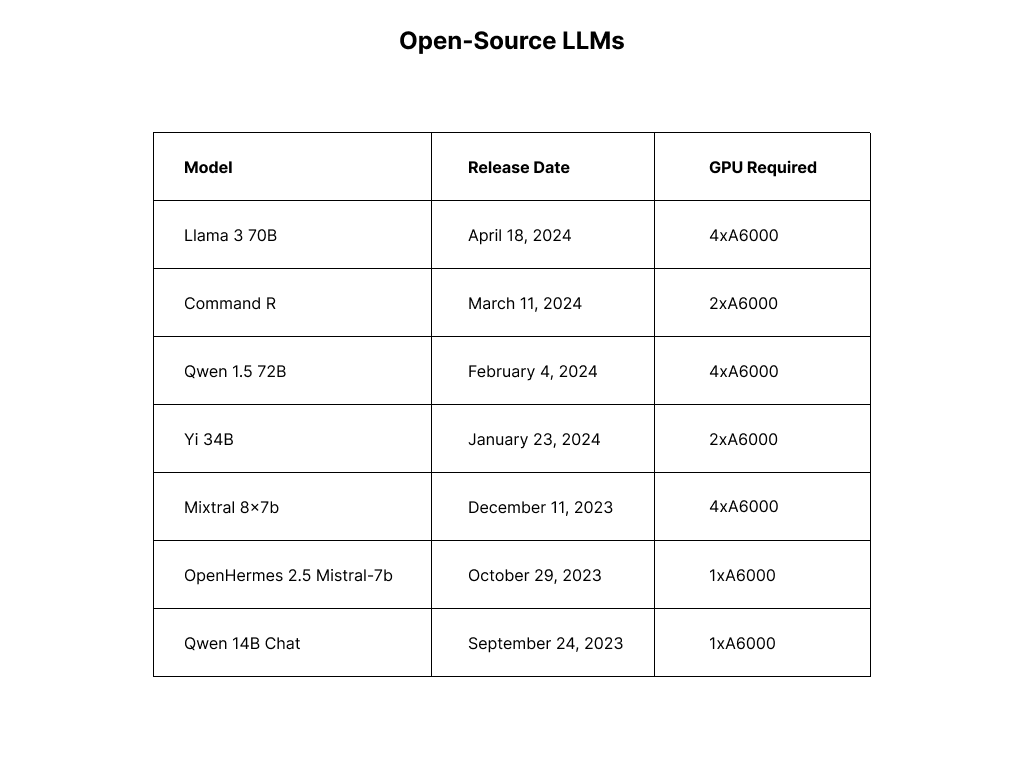

Open-source models have become increasingly GPU-hungry as they continue to grow in size. With the introduction of Mixture of Experts (MoE) models like Mixtral 8x7B, the demand for powerful GPUs has skyrocketed. However, there is one notable exception: Llama-3-8b. This particular model breaks the mold in terms of performance and serves as a promising indication that open-source models can catch up to their proprietary counterparts while still being mindful of GPU requirements.

Our research reveals that as open-source models have evolved, the GPU requirements necessary to run them effectively have grown as well. The following table provides a snapshot of popular open-source models that moved the needle. The table highlights each model's release date and how many GPUs are required to serve an unquantized version of it.

As we examined how open-source models are catching up to the performance of proprietary models, it became clear that the best-performing models are getting bigger. This means more and more GPUs are needed to run the full version of each groundbreaking model. Llama-3-8b does provide some hope for smaller yet powerful models. Hopefully other foundational models can continue to push open-source closer to proprietary models.
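For a rough sense of where GPU counts like these come from, the sketch below estimates how many GPUs are needed just to hold a model's unquantized weights. Everything in it is an assumption for illustration: 16-bit weights (2 bytes per parameter), a 48 GB card such as the A6000, a 20% allowance for activations and KV cache, and approximate parameter counts. It is not the method used to build the table, and its results may differ from the table's figures.

```python
import math


def gpus_needed(params_billion: float,
                bytes_per_param: float = 2.0,  # assumption: FP16/BF16 weights
                gpu_mem_gb: float = 48.0,      # assumption: 48 GB card (e.g. A6000)
                overhead: float = 1.2) -> int: # assumption: 20% runtime headroom
    """Back-of-the-envelope GPU count for serving an unquantized model."""
    # 1 billion parameters at N bytes each is roughly N GB of weights.
    weights_gb = params_billion * bytes_per_param
    return math.ceil(weights_gb * overhead / gpu_mem_gb)


# Approximate total parameter counts, in billions (illustrative values).
models = {
    "Llama-3-8b": 8.0,
    "Mixtral 8x7B": 46.7,  # MoE: all experts must be resident in memory
    "Llama-3-70b": 70.0,
}

estimates = {name: gpus_needed(size) for name, size in models.items()}
for name, count in estimates.items():
    print(f"{name}: ~{count} GPU(s)")
```

Under these assumptions the 8B model fits on a single card, while the larger models need several, which matches the overall trend described above even if the exact counts depend on the hardware and serving stack.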

If you are looking to serve a large language model, check out our Cloud GPU offering. If you are looking for on-demand GPU resources, you can Sign Up and use the coupon code `MassedComputeResearch` for 15% off any A6000 rental.