Update: Looking for Llama 3.1 70B GPU benchmarks? Check out our blog post on Llama 3.1 70B benchmarks.

On April 18, 2024, the AI community welcomed the release of Llama 3 70B, a state-of-the-art large language model (LLM). It is the next generation of the Llama family and supports a broad range of use cases. The model itself performs well on a wide range of industry benchmarks and offers new capabilities, including improved reasoning.

In previous blog posts, we examined well-known inference engines for serving both quantized and non-quantized versions of Llama 3. We covered quantized versions in part 1 and non-quantized versions in part 2. The focus there was on finding the easiest and best-performing engine for serving Llama 3 as an API endpoint. This post takes the next step and looks at how different GPU types perform.

The GPUs Tested

Before diving into the results, here is a brief overview of the GPUs we tested:

- NVIDIA A6000: Known for its high memory bandwidth and compute capabilities, widely used in professional graphics and AI workloads.

- NVIDIA L40: Designed for enterprise AI and data analytics, offering balanced performance.

- NVIDIA A100 PCIe: A versatile GPU for AI and high-performance computing, available in PCIe form factor.

- NVIDIA A100 SXM4: Another variant of the A100, optimized for maximum performance with the SXM4 form factor.

- NVIDIA H100 PCIe: The newest GPU in this comparison, boasting improved performance and efficiency, tailored for AI applications.

Benchmarking Methodology

There are many engines and techniques we could have used to compare performance across the various GPUs. We chose the Hugging Face Text Generation Inference (TGI) engine as the primary way to serve Llama 3 for one main reason: it is the only inference engine we have seen that provides a mechanism to benchmark.
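For readers who have not used it, the following is a minimal sketch of how a TGI benchmark run might be assembled from Python. It only builds and prints the command line for the `text-generation-benchmark` tool that ships with TGI. The model ID, batch sizes, and token lengths are illustrative assumptions, not the settings used for this post, and the flag names should be checked against `text-generation-benchmark --help` for your TGI version.

```python
import shlex


def build_tgi_benchmark_command(tokenizer_name, batch_sizes,
                                sequence_length, decode_length, runs):
    """Build the argv list for TGI's text-generation-benchmark CLI.

    All values passed in are illustrative; they are not the settings
    used to produce the results in this post.
    """
    cmd = ["text-generation-benchmark", "--tokenizer-name", tokenizer_name]
    # The flag is repeated once per batch size to sweep several sizes.
    for size in batch_sizes:
        cmd += ["--batch-size", str(size)]
    cmd += [
        "--sequence-length", str(sequence_length),  # prefill (prompt) tokens
        "--decode-length", str(decode_length),      # generated tokens
        "--runs", str(runs),                        # repetitions per batch size
    ]
    return cmd


# Assumed model ID and sweep parameters, for illustration only.
command = build_tgi_benchmark_command(
    tokenizer_name="meta-llama/Meta-Llama-3-70B-Instruct",
    batch_sizes=[1, 2, 4, 8],
    sequence_length=512,
    decode_length=128,
    runs=10,
)
print(shlex.join(command))
```

As far as we know, the tool expects a TGI server to be running already with the model loaded, so the printed command is typically executed inside the same container as the server.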

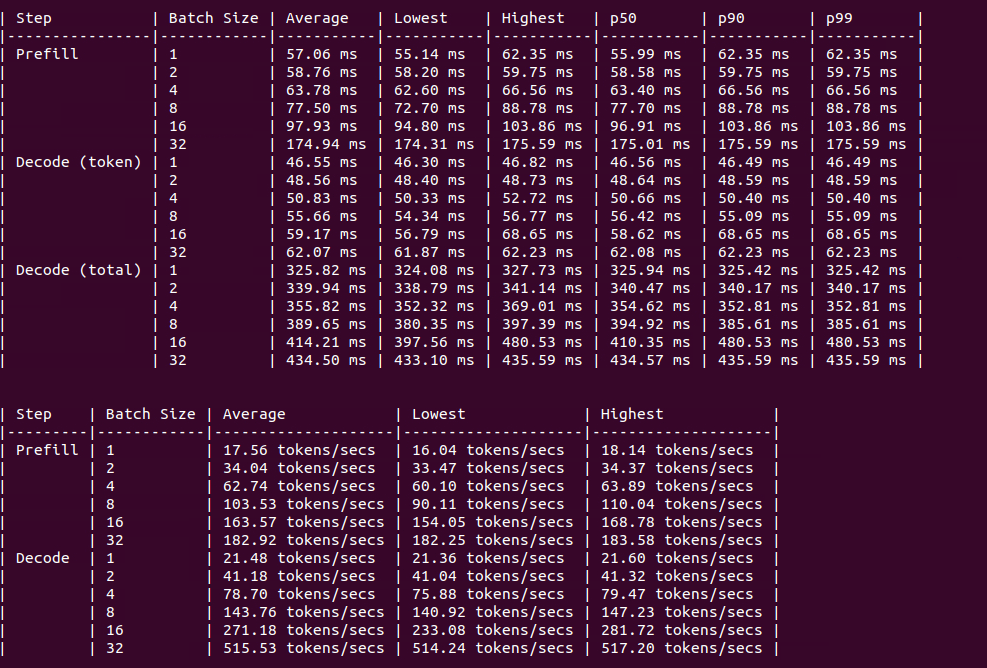

The benchmark provided by TGI lets us compare results across batch sizes for both the prefill and decode steps. It is a fantastic way to view average, minimum, and maximum tokens per second as well as p50, p90, and p99 results. If you want to learn more about how to conduct benchmarks via TGI, reach out; we would be happy to help.
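To make those summary statistics concrete, here is a small sketch of how average, min, max, and percentile values can be computed from a list of per-run throughput samples. This is not TGI's own implementation, and the sample values are made-up placeholders rather than measurements from our runs.

```python
import statistics


def summarize(samples):
    """Return avg/min/max and p50/p90/p99 for a list of numeric samples."""
    ordered = sorted(samples)
    # With n=100, quantiles() returns 99 cut points; cuts[k - 1] is the
    # k-th percentile. "inclusive" treats the data as the full population.
    cuts = statistics.quantiles(ordered, n=100, method="inclusive")
    return {
        "avg": statistics.fmean(ordered),
        "min": ordered[0],
        "max": ordered[-1],
        "p50": cuts[49],
        "p90": cuts[89],
        "p99": cuts[98],
    }


# Hypothetical tokens-per-second samples, for illustration only.
example_samples = [41.0, 39.5, 42.3, 40.8, 38.9, 43.1, 40.2, 41.7]
for name, value in summarize(example_samples).items():
    print(f"{name}: {value:.2f}")
```

Percentiles matter here because an average can hide occasional slow runs; p90 and p99 show how the tail behaves.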

Results

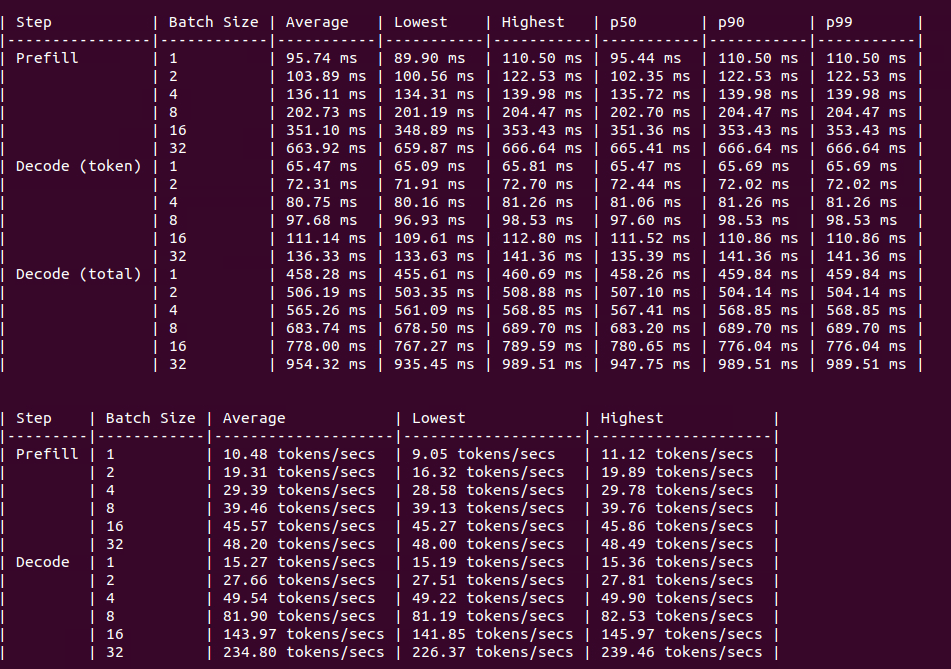

RTX A6000

Figure: Benchmark on 4xA6000

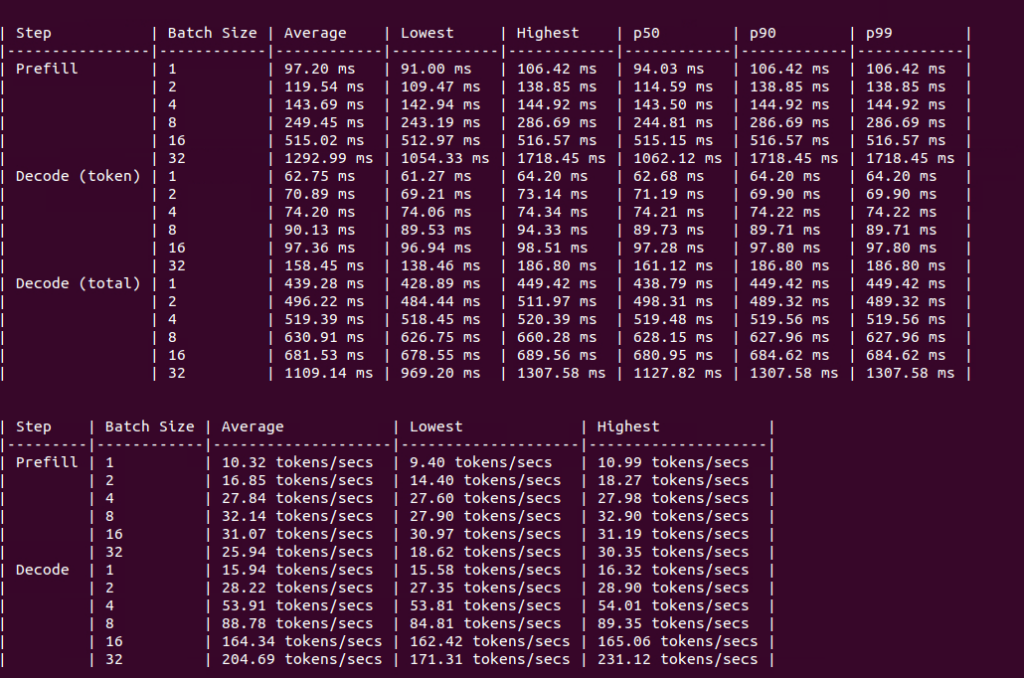

L40

Figure: Benchmark on 4xL40

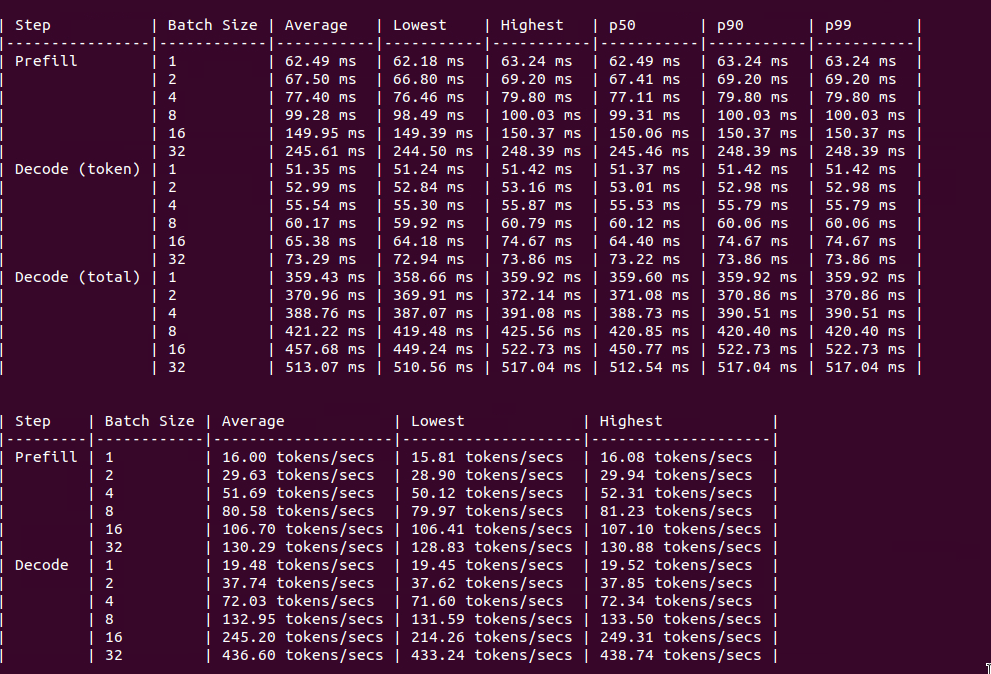

A100 PCIe

Figure: Benchmark on 2xA100 PCIe

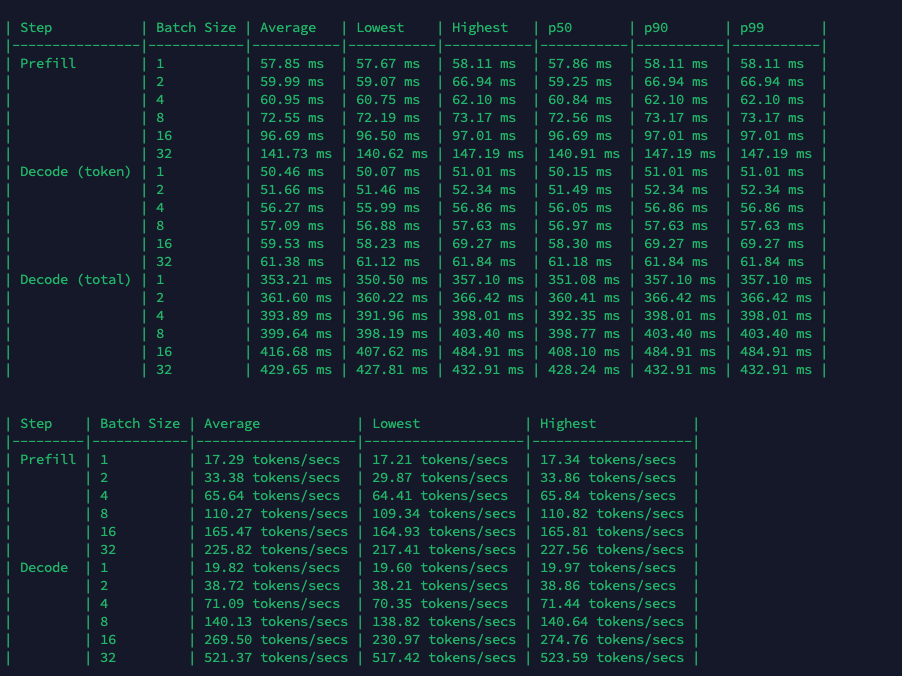

A100 SXM4

Figure: Benchmark on 2xA100 SXM4

H100 PCIe

Figure: Benchmark on 2xH100
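
The GPU counts in the figures (four A6000 or L40 cards versus two A100 or H100 cards) are consistent with a rough memory estimate. The sketch below assumes 16-bit weights at 2 bytes per parameter and 80 GB A100 cards; neither is stated in this post, so treat both as assumptions. It also counts only the model weights and ignores the KV cache and activations, which need additional headroom.

```python
# Rough weight-memory estimate for a 70B-parameter model.
# Assumptions (not stated in the post): 16-bit weights, 80 GB A100 cards.
PARAMS_BILLIONS = 70
BYTES_PER_PARAM_16BIT = 2


def weights_gb(params_billions, bytes_per_param):
    """Weight memory in GB: (billions of params) * (bytes per param)."""
    return params_billions * bytes_per_param


def headroom_gb(gpu_count, gb_per_gpu, needed_gb):
    """Total GPU memory left after loading the weights."""
    return gpu_count * gb_per_gpu - needed_gb


# (GPU count, memory per GPU in GB) for each tested configuration.
configs = {
    "4xA6000": (4, 48),
    "4xL40": (4, 48),
    "2xA100 (80 GB assumed)": (2, 80),
    "2xH100 PCIe": (2, 80),
}

needed = weights_gb(PARAMS_BILLIONS, BYTES_PER_PARAM_16BIT)
for name, (count, per_gpu) in configs.items():
    spare = headroom_gb(count, per_gpu, needed)
    print(f"{name}: {count * per_gpu} GB total, {spare} GB beyond weights")
```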

Conclusion

Hugging Face TGI provides a consistent mechanism to benchmark across multiple GPU types. Based on these results, we could also calculate the most cost-effective GPU for running a Llama 3 inference endpoint. Understanding these nuances can help in making informed decisions when deploying Llama 3 70B, ensuring you get the best performance and value for your investment.
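
One simple way to frame cost-effectiveness is tokens generated per dollar: throughput multiplied by 3,600 seconds, divided by the hourly rental price. The sketch below shows that calculation. The configuration names, throughput figures, and prices are hypothetical placeholders, not our measured results or actual marketplace prices.

```python
def tokens_per_dollar(tokens_per_second, hourly_price_usd):
    """Tokens generated per US dollar at a sustained throughput."""
    if hourly_price_usd <= 0:
        raise ValueError("hourly_price_usd must be positive")
    return tokens_per_second * 3600 / hourly_price_usd


def rank_by_value(results):
    """Sort configurations from most to least tokens per dollar."""
    return sorted(
        results,
        key=lambda r: tokens_per_dollar(r["tokens_per_second"],
                                        r["hourly_price_usd"]),
        reverse=True,
    )


# Hypothetical placeholder numbers, for illustration only.
placeholder_results = [
    {"name": "config-a", "tokens_per_second": 100.0, "hourly_price_usd": 2.0},
    {"name": "config-b", "tokens_per_second": 150.0, "hourly_price_usd": 4.0},
]

for row in rank_by_value(placeholder_results):
    value = tokens_per_dollar(row["tokens_per_second"], row["hourly_price_usd"])
    print(f'{row["name"]}: {value:,.0f} tokens per dollar')
```

Note that the fastest configuration is not necessarily the best value: in the placeholder data, the slower but cheaper option produces more tokens per dollar.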

If you want to replicate these results, check out our marketplace to rent a GPU virtual machine. Sign up, and within a few minutes you can have a working VM to test them on.