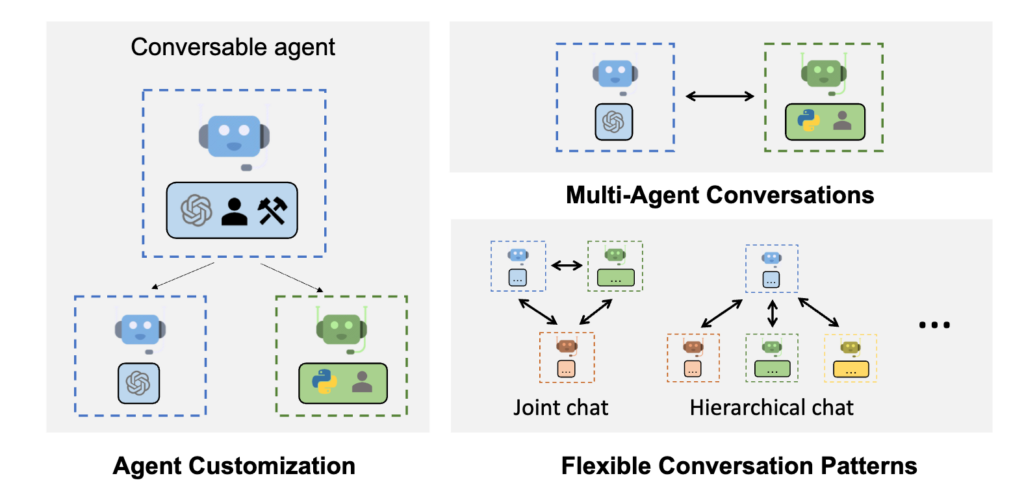

Microsoft's AutoGen project provides a multi-agent conversation framework as a high-level abstraction for building LLM workflows. As modern software applications grow more complex, AutoGen offers a promising way to automate and speed up tasks. In this blog, we will look at how you can power AutoGen with open-source LLMs, using Ollama to run the models locally and LiteLLM to expose them through an OpenAI-compatible API.

Tools needed

- 1x A6000 Virtual Machine

- Visual Studio Code

- Computer Terminal

- AutoGen

- Ollama

- LiteLLM

Steps to get set up

A. Log in to your Massed Compute Virtual Machine, either through the browser or the ThinLinc client.

B. If your virtual machine already has Visual Studio Code installed, skip to the next step. To install VS Code, open the menu in the lower left corner and select the 'Software Manager' icon (1). In that program, search for 'visual studio code' (2), then select and download the app listed (3).

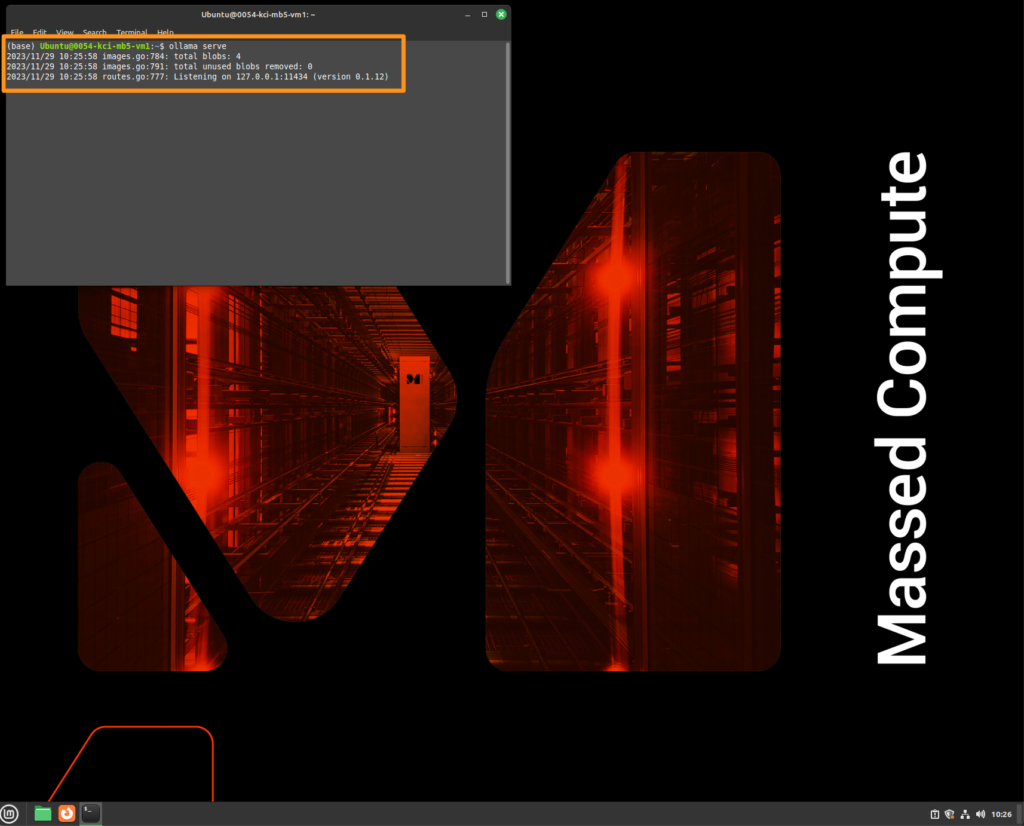

C. Open your terminal and install Ollama by running `curl https://ollama.ai/install.sh | sh`. Once the installation finishes, start the service with `ollama serve`.

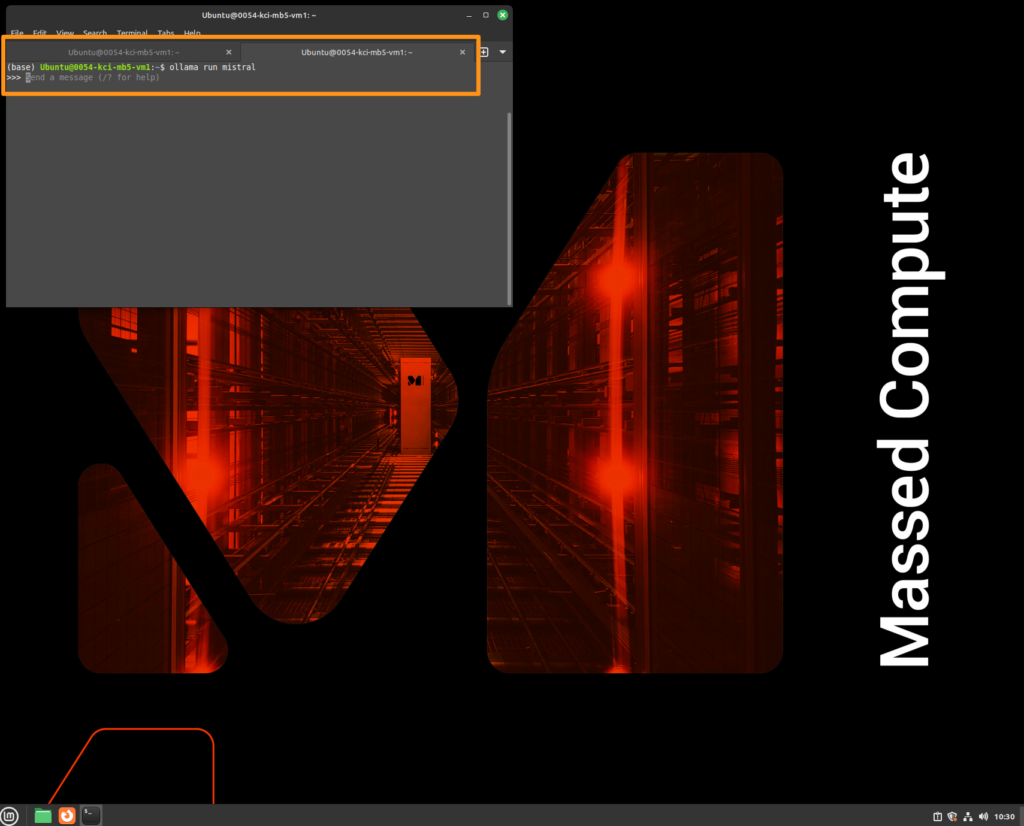

D. Once Ollama is installed and running, open a new tab in your terminal and start a model, for example with `ollama run mistral`. There are various models to choose from, all listed on Ollama's website. For this tutorial I'll use the Mistral model.

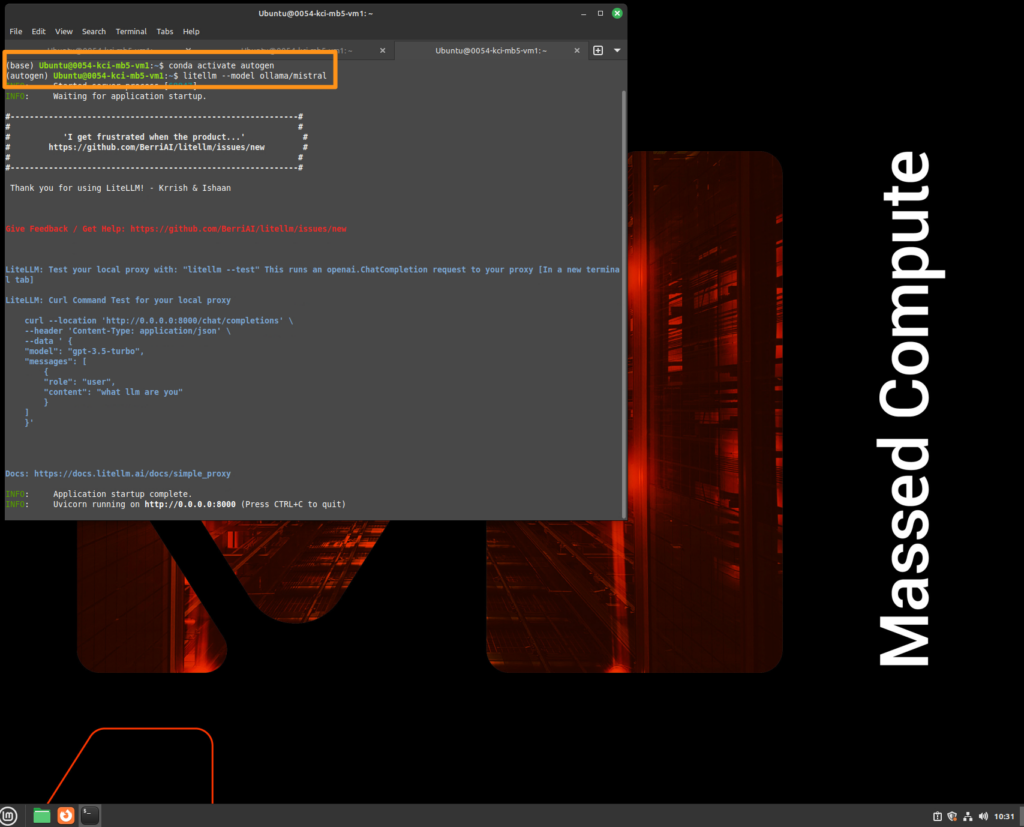
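Before moving on, it can help to confirm that the Ollama server really has the model available. The sketch below queries Ollama's `/api/tags` endpoint, which lists locally available models. It assumes Ollama is listening on its default address, `http://localhost:11434`; the helper names (`model_names`, `list_local_models`, `has_model`) are made up for this example.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434"


def model_names(tags_payload: dict) -> list:
    """Extract model names from the JSON returned by Ollama's /api/tags endpoint."""
    return [entry["name"] for entry in tags_payload.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL) -> list:
    """Ask the running Ollama server which models are available locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as response:
        return model_names(json.load(response))


def has_model(names: list, wanted: str) -> bool:
    """Return True if `wanted` matches a local model, ignoring the ':tag' suffix."""
    return any(name.split(":")[0] == wanted for name in names)
```

With `ollama serve` running, `has_model(list_local_models(), "mistral")` should return `True` once the Mistral model has been downloaded.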

E. Now that Ollama is running and the model you want is installed, create an isolated environment using conda. In a new tab in your terminal, run `conda create -n autogen python=3.11`.

If conda is not already installed, install it first by following the Linux instructions on the conda website.

After the conda environment is created, activate it by running `conda activate autogen`.

F. Install AutoGen and LiteLLM using `python -m pip install pyautogen litellm`.

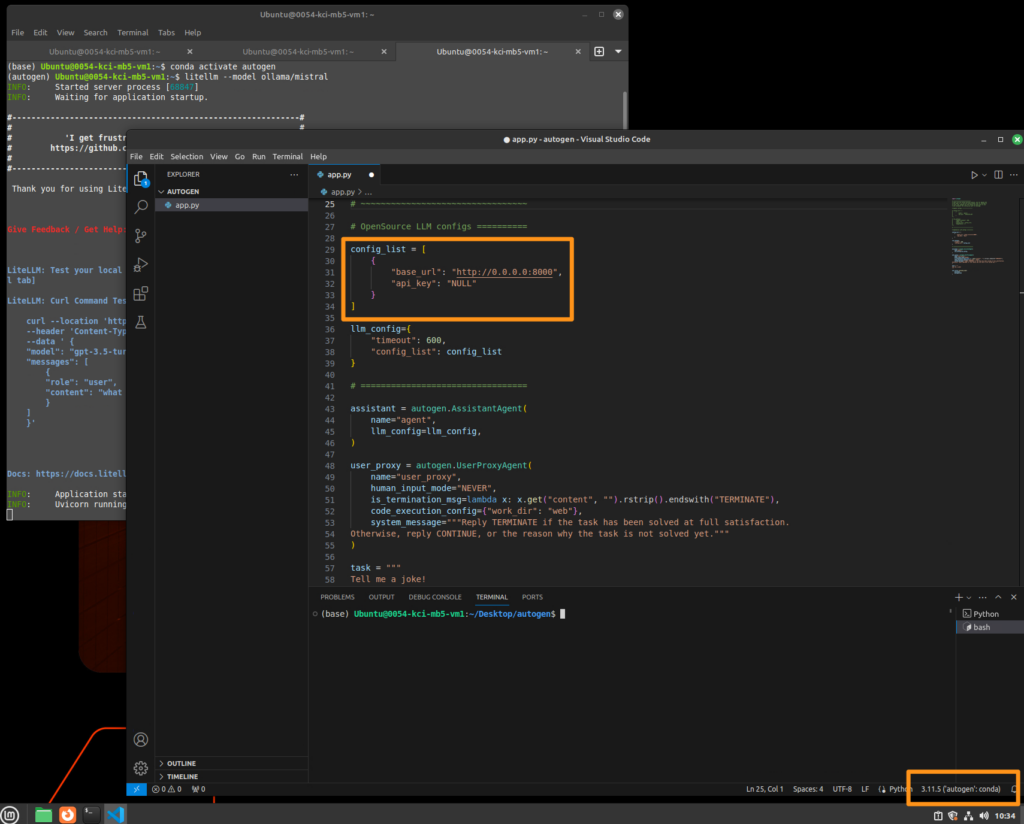
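If you want to double-check that both packages landed in the active conda environment, a quick check along these lines may help. It is only a sketch: it uses the standard library's `importlib.metadata`, and the helper name `installed_versions` is made up for this example.

```python
from importlib import metadata
from typing import Optional


def installed_versions(packages: list) -> dict:
    """Map each package name to its installed version, or None if it is missing."""
    versions: dict = {}
    for name in packages:
        try:
            found: Optional[str] = metadata.version(name)
        except metadata.PackageNotFoundError:
            found = None
        versions[name] = found
    return versions


if __name__ == "__main__":
    # Package names as used in the pip command from step F.
    for name, version in installed_versions(["pyautogen", "litellm"]).items():
        print(f"{name}: {version or 'NOT INSTALLED'}")
```

Run it with the `autogen` environment activated; a `NOT INSTALLED` line usually means pip installed into a different Python than the one you are running.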

G. Now you can start LiteLLM and wrap the Ollama Mistral model in an API. Run `litellm --model ollama/mistral`.
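To confirm the proxy is answering before wiring it into AutoGen, you could send it a single chat request. The sketch below assumes the proxy serves the OpenAI-style `/chat/completions` route and that you pass in the URL LiteLLM printed at startup; the function names are made up for this example.

```python
import json
import urllib.request


def build_chat_request(base_url: str, prompt: str, model: str = "ollama/mistral") -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the LiteLLM proxy."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(base_url: str, prompt: str) -> str:
    """Send the prompt through the proxy and return the model's reply text."""
    request = build_chat_request(base_url, prompt)
    with urllib.request.urlopen(request, timeout=120) as response:
        reply = json.load(response)
    # OpenAI-style responses carry the text under choices[0].message.content.
    return reply["choices"][0]["message"]["content"]
```

For example, `print(ask("http://0.0.0.0:4000", "Say hello in one sentence."))`, where the URL is a placeholder that you should replace with the address shown in your own LiteLLM output.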

H. Open Visual Studio Code and configure AutoGen using the `base_url` shown in the LiteLLM output.

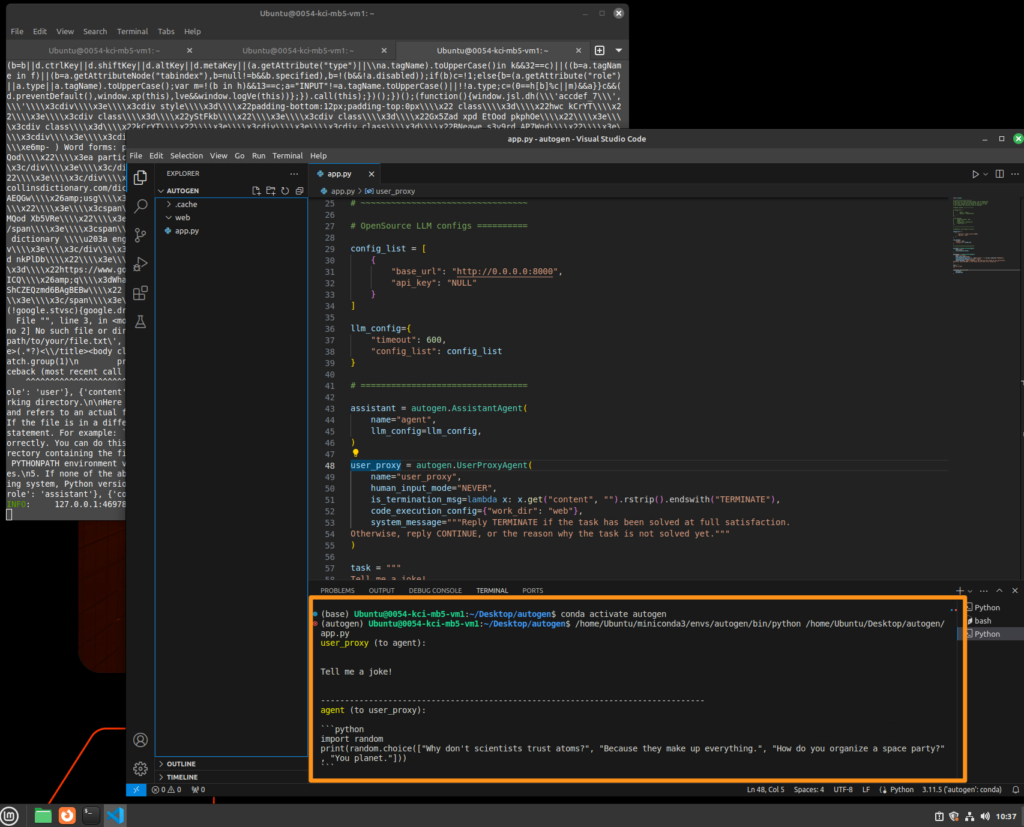
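As a rough text version of this step, the configuration might look like the following. This is a minimal sketch, assuming the `pyautogen` 0.2-style API (`AssistantAgent`, `UserProxyAgent`, `initiate_chat`) and a proxy started without authentication; the helper names, the agent names, and the `coding` working directory are illustrative choices, not part of the original setup.

```python
def build_llm_config(base_url: str, model: str = "ollama/mistral") -> dict:
    """Build an AutoGen llm_config that targets the local LiteLLM proxy."""
    return {
        "config_list": [
            {
                "model": model,
                # Use the URL printed by LiteLLM when it starts.
                "base_url": base_url,
                # Placeholder value; assumes the proxy does not check API keys.
                "api_key": "not-needed",
            }
        ]
    }


def run_task(base_url: str, task: str) -> None:
    """Start a two-agent conversation that works on `task`."""
    # Imported here so build_llm_config can be used without pyautogen installed.
    import autogen

    assistant = autogen.AssistantAgent(
        name="assistant",
        llm_config=build_llm_config(base_url),
    )
    user_proxy = autogen.UserProxyAgent(
        name="user_proxy",
        human_input_mode="NEVER",       # run without asking for human input
        max_consecutive_auto_reply=3,   # keep the exchange short
        code_execution_config={"work_dir": "coding", "use_docker": False},
    )
    user_proxy.initiate_chat(assistant, message=task)
```

Calling `run_task("http://0.0.0.0:4000", "Write a Python function that reverses a string.")` would kick off a conversation; the URL is a placeholder for whatever address your LiteLLM instance reports. Note that with `use_docker` set to `False`, any code the assistant writes runs directly on the VM.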

I. Now write your tasks and enjoy using AutoGen!

Enjoy AutoGen

AutoGen can be incredibly powerful. Historically, you had to use the OpenAI API to take advantage of it, but Ollama and LiteLLM now offer a simple way to run it on open-source models instead. Rent an A6000 Virtual Machine today and experience seamless performance at unbeatable prices.
