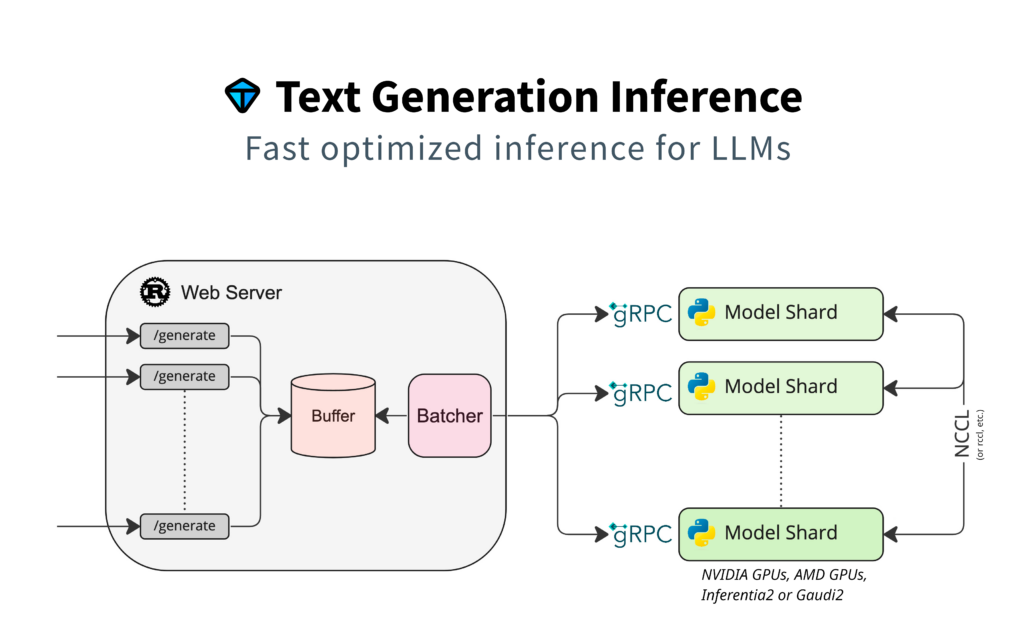

Introduction

Are you interested in setting up an inference endpoint for one of your favorite models? Have you been wanting to leverage the full unquantized version of models but found the process too complex or time-consuming? Do you wish there was a simple and efficient way to deploy full models for your own projects or applications? Look no further! We will show you how to leverage Hugging Face’s Transformers library and their Model Hub to deploy your very own large language model inference API in under 2 minutes. With just a few steps, and a pre-configured Virtual Machine, you’ll have an API to interact with a LLM in no time.

Rent a VM Today!Tools needed

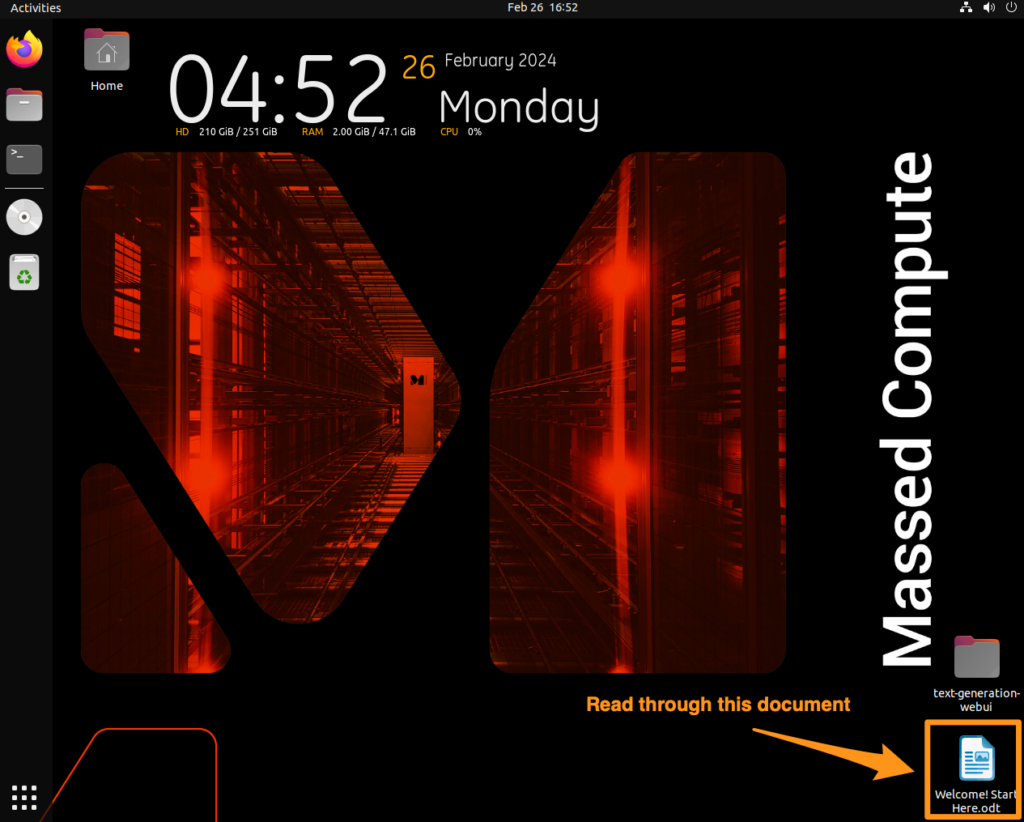

- A Massed Compute Virtual Machine

- Category: Bootstrap

- Image: LLM API

- Docker

Steps to get Setup

- Our Virtual Machine’s come pre-installed with Docker and have a Welcome doc providing the same steps in this post.

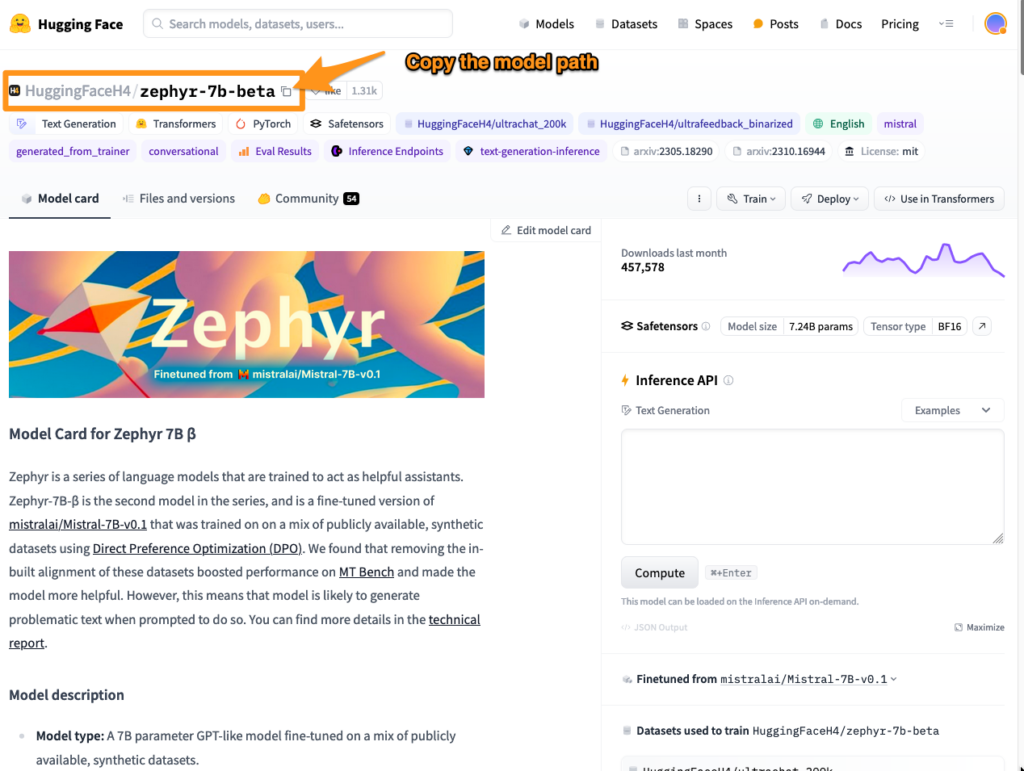

2. Navigate to Hugging Face and find a model of interest. In this example we are going to use Zephyr. On the model page copy the model id which we will use later.

3. Now for some work in the terminal. Run these commands in order

mkdir datavolume=$PWD/datamodel=HuggingFaceH4/zephyr-7b-beta

4. Then run the following to spin up a docker container serving the Zephyr model.

docker run --gpus all --shm-size 1g -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:1.4 --model-id $model- You may have to run `sudo` in front of the docker command

Some important pieces in this command

--gpus allThis means the model will be leveraging all GPUs available within the VM.-pThis is the Port the API request can be made to to hit the model--model-idThis is what model the container will load. We already set the $model variable above in step three.

There are many more parameters that you can learn about on Hugging Face’s website for Text Generation Inference.

Once the model is fully downloaded and ready to use, you can then make API requests against this model.

5. To make an API request against this model you will need a few variables.

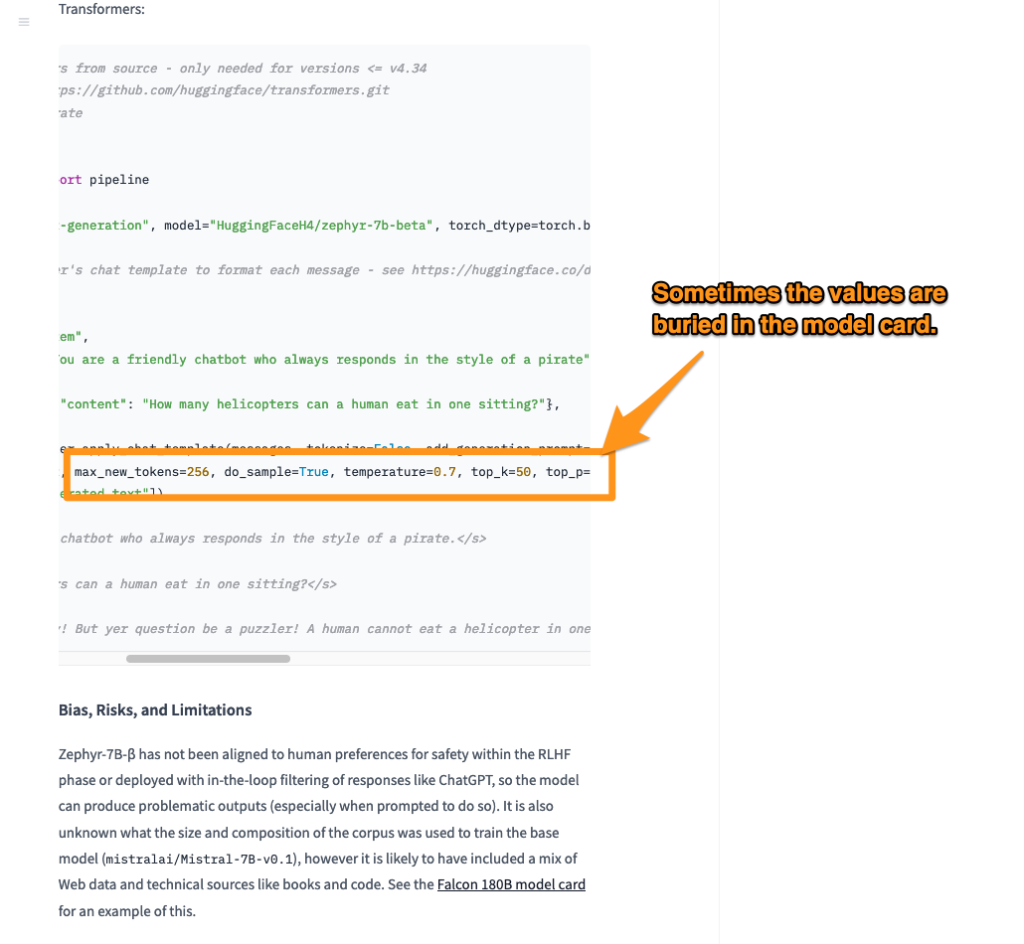

From the Model Card on Hugging Face

- max_new_tokens

- temperature

- top_k

- top_p

Some times these can be hard to find, but most model cards have this information available.

Information from other sources

Massed Compute VM IP– When you provision a VM you will be provided an IP addressDocker Container Port– In step 4 above we set what port the container is available on.

Pull it all together

curl -X POST

MASSED_COMPUTE_VM_IP:DOCKER_PORT/generate

-H 'Content-Type: application/json'

-d '{"inputs":"<|system|>You are a friendly chatbot.n<|user|>Why is the sky blue?n<|assistant|>","parameters":{"do_sample": true, "max_new_tokens": 256, "repetition_penalty": 1.15, "temperature": 0.7, "top_k": 50, "top_p": 0.95, "best_of": 1}}'

If you want to make stream api requests use the /generate_streamendpoint

curl -X POST

MASSED_COMPUTE_VM_IP:DOCKER_PORT/generate_stream

-H 'Content-Type: application/json'

-d '{"inputs":"<|system|>You are a friendly chatbot.n<|user|>Why is the sky blue?n<|assistant|>","parameters":{"do_sample": true, "max_new_tokens": 256, "repetition_penalty": 1.15, "temperature": 0.7, "top_k": 50, "top_p": 0.95, "best_of": 1}}'

6. Integrate your project with this new inference endpoint.

How can I see that my model is loaded?

A great question. There are some commands that you can use to review the VM hardware to make sure your model is loaded. The best command is nvitopThis command lets you review all the system resources

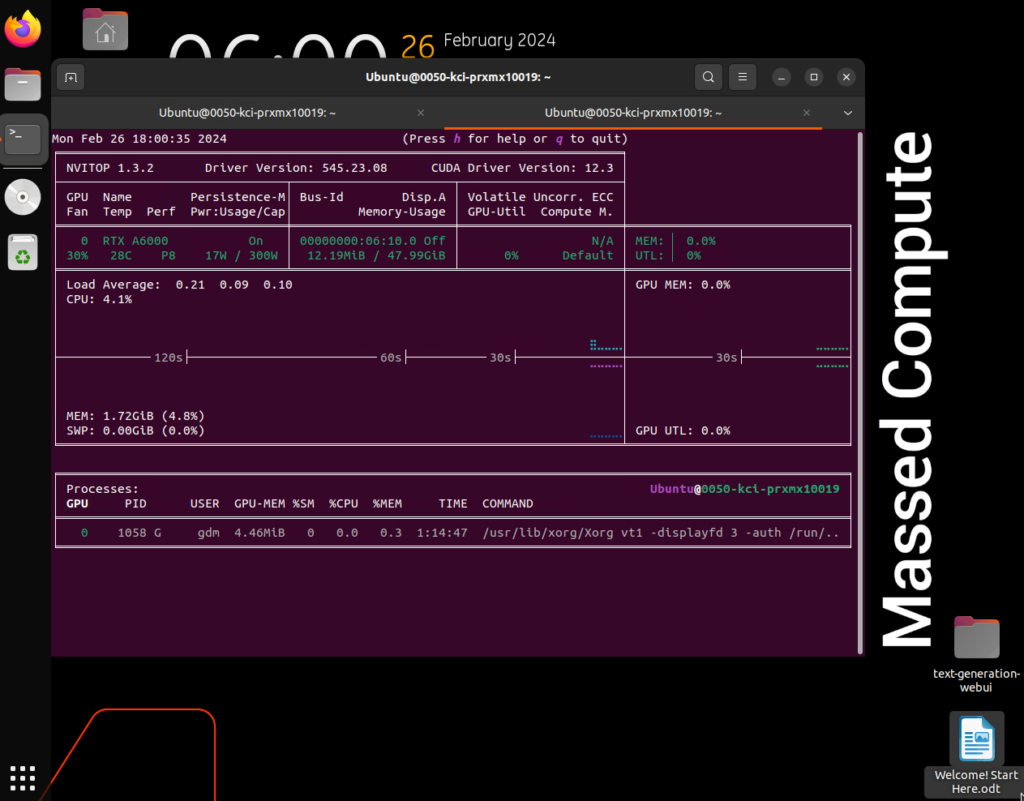

Before a model is loaded:

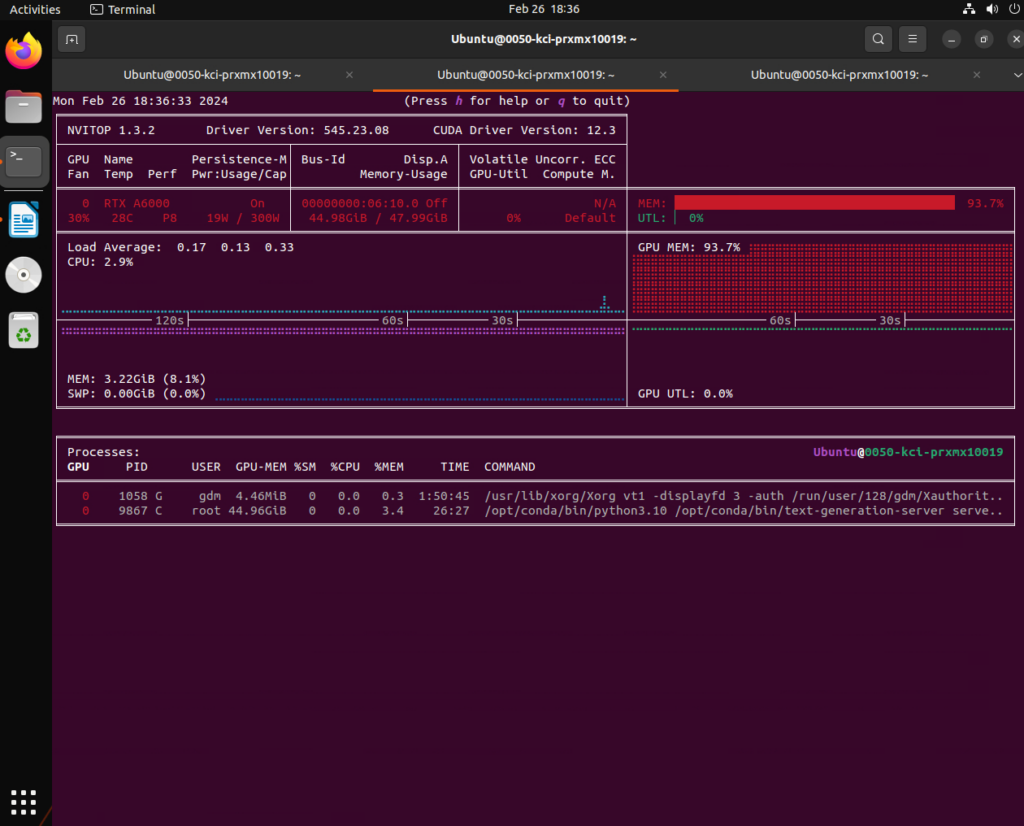

After a model is loaded. You can now see the GPU resources fully loaded the model.

Conclusion

Leveraging Hugging Face’s Text Generation Inference you can easily deploy full unquantized models in the matter of minutes. Leveraging the TGI docker container also allows you ultimate flexibility in case you want to swap models or spread multiple models across your hardware. In the next post we will look at how you can leverage the TGI docker containers to deploy multiple models when you have multiple GPUs. You can read more about that on our Part 2.

Rent a VM Today!