Massed Compute has reached a significant milestone in our mission to accelerate AI innovation. We have secured up to $300 million in equity and revenue-share financing from Digital Alpha and entered a strategic collaboration with Cisco. This investment and partnership will substantially expand our AI cloud footprint, enabling us to meet growing market demand for […]

Category Archives: LLM

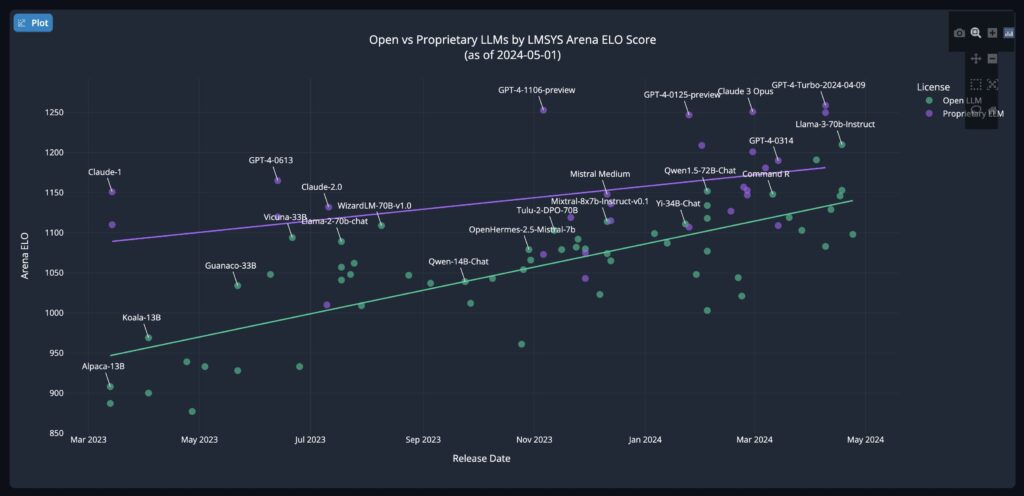

Recently, there have been a few posts about how open-source models like Llama 3 are catching up to the performance level of some proprietary models. Andrew Reed from Hugging Face created a visual progress tracker that compares various models, and it clearly shows a growing trend of open-source models gaining ground. […]

- Considerations
- Testing Scenario
- Results
- Conclusion

Introduction – Multiple LLM APIs

If you haven’t already, go back and read Part 1 of this series. In this guide, we look at how you can serve multiple models in the same VM. As you start to decide how you want to serve models as an inference endpoint, you have a few […]

Introduction

Are you interested in setting up an inference endpoint for one of your favorite models? Have you been wanting to leverage the full unquantized version of models but found the process too complex or time-consuming? Do you wish there was a simple and efficient way to deploy full models for your own projects or […]